Apple released the Vision Pro at the perfect time

And why the $3,499 price tag shouldn't surprise you

Welcome back to Year 2049! 🔮

If this was forwarded to you, subscribe to get weekly analysis and insights about the technologies and trends shaping our future.

My first time trying out a VR headset felt surreal and unsettling. I was an astronaut hopping around a planet in outer space with no gravity. My head was spinning, I was dizzy, and I almost ran into the TV. But I was having the time of my life.

If you’ve tried a VR headset or an AR experience, you probably remember the first time vividly, because it’s remarkable. How do we make a simulated environment feel so real? How do we blend the digital world so effortlessly with our physical one?

Yet AR and VR haven’t become the mainstream success that companies like Google, Microsoft, and Meta expected, despite the time and money they kept pouring into them. Those products were innovative, but maybe they were ahead of their time.

Innovation isn’t enough for products to succeed. They must also come at the right time. Has Apple released the right headset at the right time? I believe so, for three reasons:

Their developer-first focus

The rise of generative AI tools that lower development barriers

The hardware advancements that enable a magical user experience

Let’s dive in.

#1: Their developer-first focus

If you gasped at the Vision Pro’s whopping price of $3,499 USD, that’s what Apple intended.

The Vision Pro isn’t for the average consumer. It’s for developers and businesses that can afford to invest that money to lay their bricks in Apple’s new AR/VR ecosystem. It’s the price of entry to build one of the first apps in this new territory, and that’s a rare opportunity.

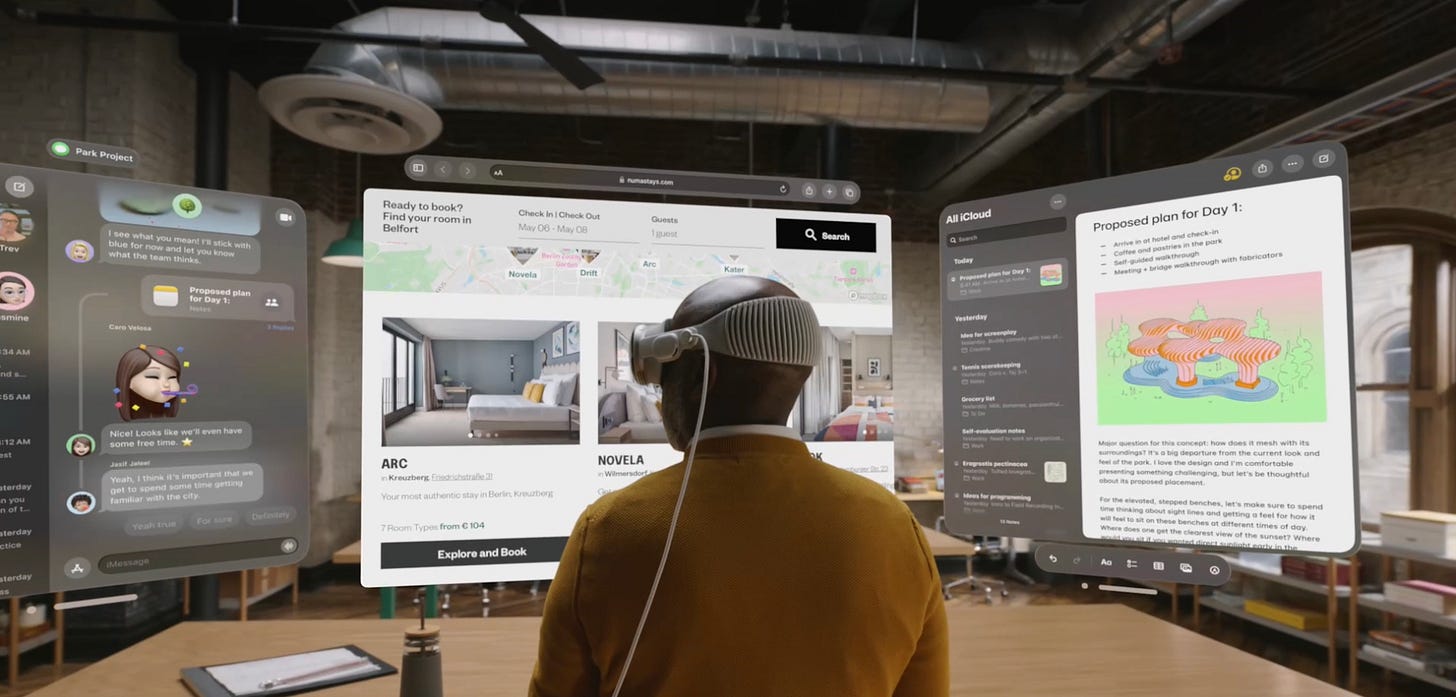

Apple mostly showcased its own apps during its June 5th keynote, and they looked familiar: flat 2D windows for browsing, email, and messages.

It also offered a few glimpses of 3D experiences, like watching an NFL game.

For the Vision Pro (or any other headset) to succeed, it needs a library of apps, experiences, and content that incentivize customers to purchase it.

It’s hard to gain mass adoption before that library is available. Launching to the mass market too early can even backfire by setting off a vicious cycle:

Customers complain about the lack of apps or content to consume

Complaints reduce the demand for the headset and discourage others from buying

Lower sales scare off developers and businesses from investing time and money in creating their own apps

The headset is inducted into the tech graveyard

But Apple’s pricing strategy solves that problem in an unusual way.

By making the price tag so unappealing to the average person, Apple makes it likely that its first customers will be developers and businesses. They’re willing to splurge on an expensive headset, become first movers, and build apps or content they can monetize later. Remember that some of the first movers on the App Store grew into massive businesses and franchises (Angry Birds, anyone?).

Even the name, Apple Vision Pro, suggests that this is the higher-end model and that a cheaper “Apple Vision” headset may follow in the near future.

According to The Information, Apple expects to ship fewer than 500,000 Vision Pros in its first year. For context, Apple sold 1.4 million iPhones in 2007 and more than 200 million last year.

A rich library of apps, experiences, and content will drive Vision Pro demand and sales. Apple’s premium pricing reflects a bet that businesses and developers will build that library first.

#2: The rise of generative AI tools that lower barriers to creation

We’ve discussed why AR and VR need apps and content, but not how complex they are to build.

The technical effort required to create a high-quality AR or VR experience is significant, because you’re building in a 3D space rather than on a 2D screen. You either need to create a convincing and fluid virtual world (VR) or seamlessly blend the digital with the physical (AR).

The convergence of generative AI and mixed reality will be critical. Generative AI can drastically reduce the time and effort needed to develop AR and VR applications and experiences. These tools can turbocharge the creation of realistic worlds, characters, and objects, and bring them to life in a fraction of the time.

This trend has become more apparent in recent months:

Apple is reportedly developing a no-code tool that will let developers (and even non-developers) build their own mixed reality apps and publish them on the App Store. Supposedly, we’ll be able to tell Siri what app we want to build (a true Jarvis experience!).

Unity was revealed as one of Apple’s first partners for the Vision Pro. Recently, the company announced a push into generative AI, including third-party tools like Inworld AI that help people create game characters without programming them or scripting their dialogue.

Companies like Luma AI have built tools that make it easy to create 3D environments from text, images, and videos you provide. The most promising technology here is NeRF (neural radiance fields), which can reconstruct a 3D object or scene from a set of photos or even a short video.

These are just a few examples of generative AI tools and software being built to facilitate AR and VR development.

Lowering technical barriers makes life easier for developers, the audience Apple wants to prioritize, but it also opens the door to non-technical creatives who want to build apps or create content for the Apple Vision Pro.

#3: The hardware advancements that enable a magical user experience

The Vision Pro pushes the envelope from a design perspective.

The most impressive feature is the ability to interact with your hands, eyes, and voice, without any controllers. You can literally look, point, and gesture with your fingers in the air to interact with objects in this alternate reality. How mind-blowing is that? (And 16 years ago, we were amazed when Steve Jobs showed us how to scroll on the first iPhone.)

Some other impressive design features:

The Digital Crown, a dial that lets you move between AR and VR

The outward-facing display that reveals or hides your eyes so others know whether you’re immersed or can see them (I can’t believe they called it EyeSight and not iSight)

Spatial audio

Higher-resolution displays, one for each eye

All of these features are made possible by some impressive hardware enhancements.

The headset is packed with cameras and sensors that enable precise head tracking, hand tracking, and 3D mapping of your surroundings. It’s also powered by two chips that divide and conquer: the R1 processes input from the cameras and sensors, while the M2 renders the digital elements you interact with.

Apple casually mentioned that it filed more than 5,000 patents while developing the Vision Pro.

All of these enhancements serve one goal: creating the “magical” user experience that early testers have described. Rendering a fluid, responsive alternate reality and making it easy to interact with is what will make a headset stand out from the rest.

Tell me what you think of the Apple Vision Pro: is it overhyped, or does it mark a technological inflection point like the iPhone did?

Thank you for reading Year 2049! If you enjoyed this story, please share it with your friends, family, or colleagues and invite them to become free subscribers.
