📈 AI's Exponential Renaissance

Part 3 of 3: the perfect storm that made AI succeed (and the argument that started OpenAI)

Hey friends 👋

This is Part 3 of 3 of my mini-series on the history of AI until today. Writing these has been loads of fun and I hope you’ve been enjoying them.

Also, I’ve added a quick anonymous poll at the end of the story to gauge interest in an idea I had. Make sure to vote! 👀

Stay curious,

– Fawzi

Today’s Edition

Main Story: AI’s Exponential Renaissance

Bonus: AI course + another idea I had

Read Part 1: Sparks of Intelligence

Read Part 2: The Dark Winter Rollercoaster

Part 3: The Exponential Renaissance

The darkest hour is just before dawn.

After AI funding came crashing down in the early 90s and the industry entered its second winter, researchers wanted to distance themselves from the two words that carried so much baggage and failed expectations.

"Computer scientists and software engineers avoided the term artificial intelligence for fear of being viewed as wild-eyed dreamers."

– John Markoff, The New York Times, 2005

Nearly 40 years after the Dartmouth College workshop that birthed the AI field, researchers were at rock bottom again. Confidence in AI was at an all-time low, and millions of dollars of funding were pulled and redirected to other areas of research. On paper, there were some fruitful advancements and breakthroughs, but none had the revolutionary impact or scale that had been promised.

In the darkness, a perfect technological convergence was slowly approaching. The patience and perseverance of AI believers were about to be rewarded.

The Perfect Storm

Three revolutions were slowly converging to create the perfect storm for AI’s success:

#1: The Computational Power Revolution

Moore's Law, proposed by Gordon Moore in 1965 and revised in 1975, is an observation (and prediction) that the number of transistors on a microchip doubles roughly every two years, leading to exponential growth in computational power. It held remarkably well for decades, although the pace has slowed in recent years.

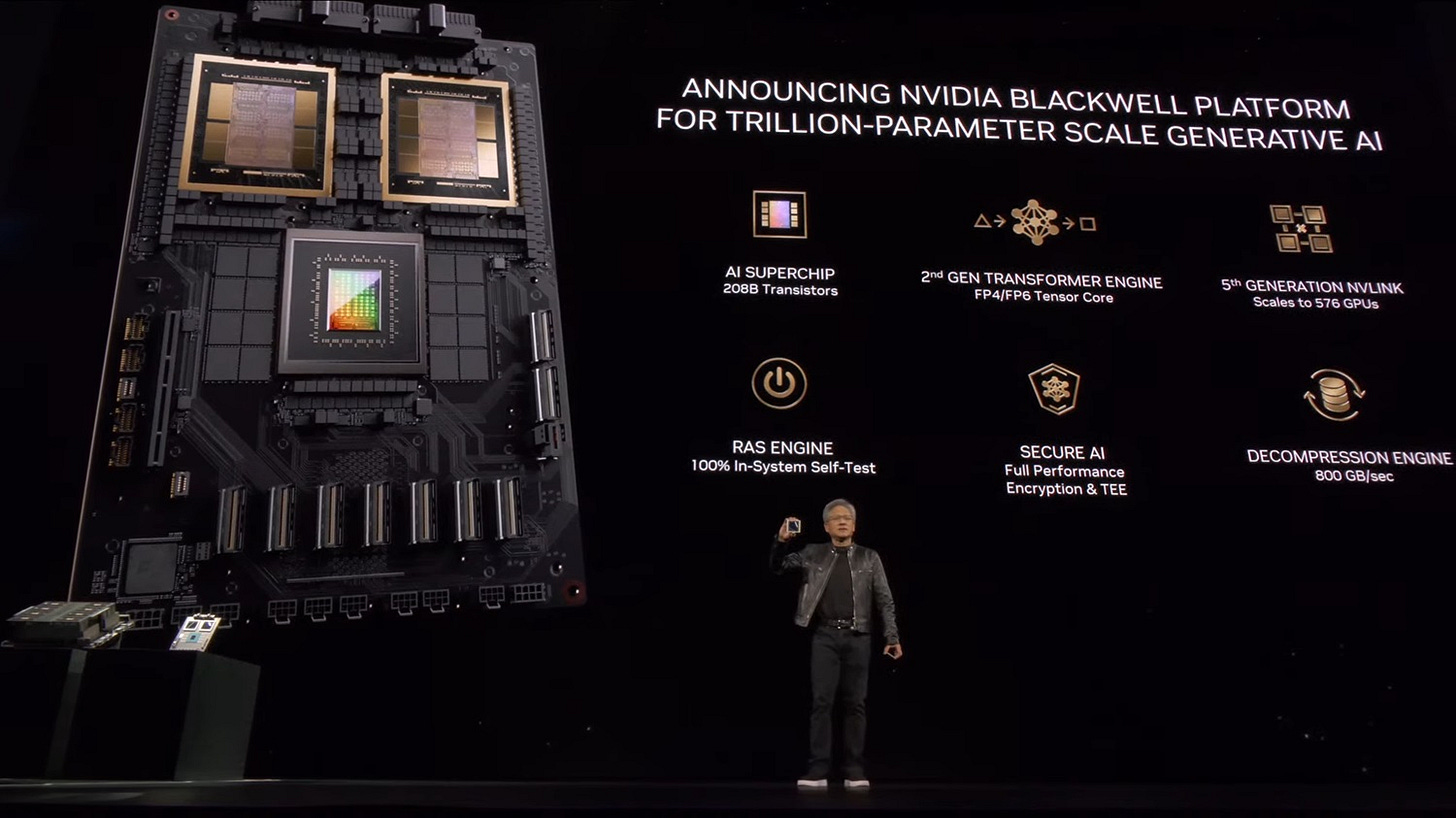

To give you an example, NVIDIA’s latest Blackwell GPU has about 200 billion transistors and is the most powerful GPU today. Compared to microchips from the past few decades, Blackwell packs:

100x more transistors than a chip from 10 years ago (~2 billion transistors)

1,000x more transistors than a chip from 20 years ago (~200 million transistors)

70,000x more transistors than a chip from 30 years ago (~3 million transistors)

1,300,000x more transistors than a chip from 40 years ago (~150,000 transistors)

40,000,000x more transistors than a chip from 50 years ago (~5,000 transistors)
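To check the arithmetic, here's a small Python sketch. It assumes Moore's Law as a clean "double every two years" rule (real chips never follow the curve exactly) and compares the growth that rule predicts over each span with the ratios listed above, using the approximate transistor counts from this article. The function names are just for illustration.

```python
# Approximate transistor counts used in this article (not precise spec-sheet figures).
BLACKWELL = 200_000_000_000

PAST_CHIPS = {  # years ago -> approximate transistor count
    10: 2_000_000_000,
    20: 200_000_000,
    30: 3_000_000,
    40: 150_000,
    50: 5_000,
}


def moores_law_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth predicted by an idealized 'double every doubling_period years' rule."""
    return 2 ** (years / doubling_period)


def compare() -> list[tuple[int, float, float]]:
    """For each span, return (years, actual ratio, ratio predicted by the rule)."""
    rows = []
    for years, count in sorted(PAST_CHIPS.items()):
        actual = BLACKWELL / count
        predicted = moores_law_factor(years)
        rows.append((years, actual, predicted))
    return rows


if __name__ == "__main__":
    for years, actual, predicted in compare():
        print(f"{years} years: {actual:>14,.0f}x actual vs {predicted:>14,.0f}x predicted")
```

Over 50 years, doubling every two years compounds to 2^25, or about 33.5 million, which lands close to the ~40 million ratio above. Individual decades wobble (the 10-year gap shows ~100x versus the rule's 32x), likely in part because a huge data-center GPU is being compared with smaller, older chips.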

This chart, created by Our World in Data, shows how this has led to an exponential rise in the power of AI systems over the decades.

#2: The Big Data Revolution

The internet, social media, and the digitalization of nearly all aspects of life led to an unprecedented avalanche of data. The internet also facilitated the availability of that data to researchers around the world. They could now easily scrape, gather, categorize, and share large training datasets.

This data became the lifeblood of machine learning algorithms, allowing them to learn, improve, and make more accurate predictions. ImageNet, started by Fei-Fei Li in 2006, is a training dataset containing more than 14 million images that have been hand-annotated across more than 20,000 object categories. Talk about a labour of love.

#3: The Connectionist AI Revolution

If you recall from Part 2, there was a rift in the AI community between two schools of thought:

Symbolic AI (aka the “top-down approach”) advocated for the use of rules, symbols, and logic to explicitly program intelligent systems. Decision trees and expert systems are examples of this approach.

Connectionist AI (aka the “bottom-up approach”) promoted the idea of letting machines learn from data and identify their own patterns, instead of explicit programming. This area was focused on machine learning, neural networks, and deep learning.

The symbolic philosophy was the “popular” one up until the second AI winter and the failure of expert systems such as XCON.

The rise in computational power and the availability of large datasets gave the connectionist approach a new lifeline.

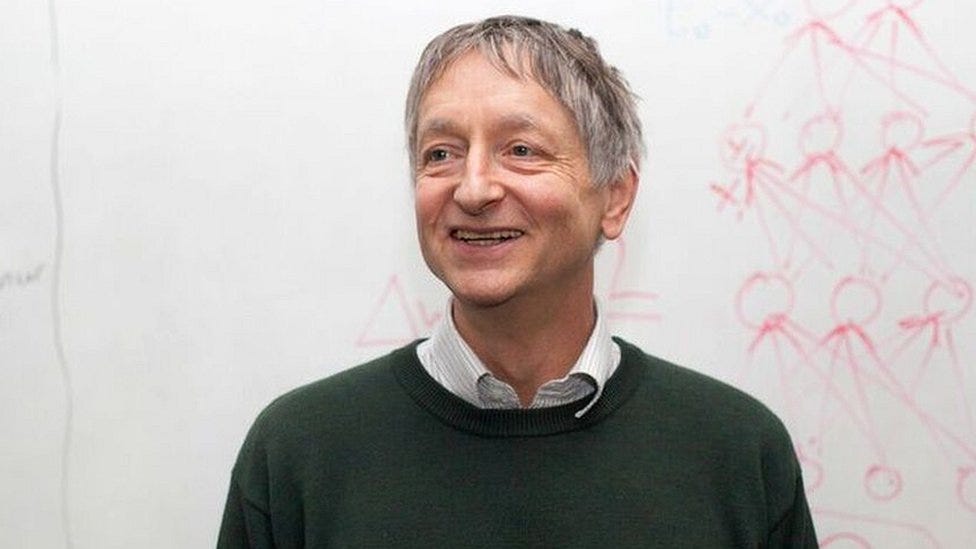

Geoffrey Hinton, who won the Nobel Prize in Physics this week, was one of the few early believers in the connectionist philosophy and neural networks. In 1986, Hinton and fellow researchers David Rumelhart and Ronald J. Williams published an influential paper that popularized the backpropagation algorithm for training neural networks. Backpropagation became an essential component of machine learning research and development for the decades that followed.
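For readers curious about what backpropagation actually computes, here's a minimal sketch in plain Python. It's an illustrative toy, not code from the 1986 paper: a hypothetical network with one input, one sigmoid "hidden" unit, and one output. Backpropagation applies the chain rule backward from the error to work out how much each weight contributed to it. The sketch checks that result against a brute-force estimate made by nudging each weight slightly, then takes one gradient-descent step. The network shape, weight values, and function names are all assumptions chosen for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(w1: float, w2: float, x: float) -> tuple[float, float]:
    """Hypothetical toy network: x -> sigmoid hidden unit -> linear output."""
    h = sigmoid(w1 * x)
    y = w2 * h
    return h, y


def loss(w1: float, w2: float, x: float, target: float) -> float:
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2


def backprop(w1: float, w2: float, x: float, target: float) -> tuple[float, float]:
    """Gradients of the loss with respect to w1 and w2, via the chain rule."""
    h, y = forward(w1, w2, x)
    dL_dy = y - target           # derivative of 0.5 * (y - target)^2
    dL_dw2 = dL_dy * h           # because y = w2 * h
    dL_dh = dL_dy * w2           # the error flows backward into the hidden unit
    dL_dz = dL_dh * h * (1 - h)  # sigmoid'(z) = h * (1 - h)
    dL_dw1 = dL_dz * x           # because z = w1 * x
    return dL_dw1, dL_dw2


def numeric_grad(w1: float, w2: float, x: float, target: float, eps: float = 1e-6) -> tuple[float, float]:
    """Brute-force check: nudge each weight a tiny bit and watch the loss change."""
    g1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
    return g1, g2


if __name__ == "__main__":
    w1, w2, x, target = 0.5, -1.2, 1.5, 0.3
    print("backprop:", backprop(w1, w2, x, target))
    print("numeric: ", numeric_grad(w1, w2, x, target))
    # One gradient-descent step: move each weight against its gradient.
    lr = 0.1
    g1, g2 = backprop(w1, w2, x, target)
    print("loss before:", loss(w1, w2, x, target))
    print("loss after: ", loss(w1 - lr * g1, w2 - lr * g2, x, target))
```

The two gradient estimates should agree to many decimal places, and the loss should drop after the update. Real networks repeat this for millions of weights and examples, which is exactly where the computing power and data described above come in.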

Incognito Mode

The AI field had learned the consequences of overpromising. Up until the second AI winter, many researchers had set out to create systems with general intelligence that would be as smart as (or smarter than) humans.

This time, they decided to keep their feet on the ground and shifted their focus to narrow intelligence (or narrow AI). Instead of trying to build and replicate the complexities of general intelligence, they built specialized systems to perform specific tasks effectively.

An early success of this approach was Deep Blue, a chess-playing computer developed by IBM, which famously defeated world chess champion Garry Kasparov in 1997. This victory demonstrated the power and potential of narrow AI.

Researchers now combined:

Powerful Computers + Large Datasets + Improved Learning Algorithms + Specific Tasks

It was the perfect recipe.

By focusing on narrow AI, researchers were able to address immediate needs. Different AI techniques were at the foundation of major applications at the time, like fraud detection in online banking and spam filtering in email.

However, the AI field didn’t receive credit for these contributions. Researchers were trying to distance their work from the AI label because of its past failures, and successes like fraud and spam detection were often seen as industry-specific advances rather than as AI.

AI was working hard behind-the-scenes in incognito mode.

"A lot of cutting-edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore."

– Nick Bostrom, in 2006

AlexNet and the Deep Learning boom

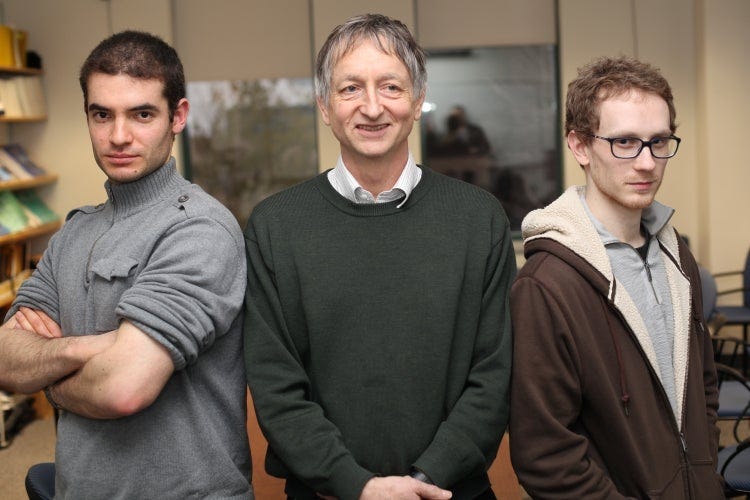

The breakthrough that marked a major turning point in AI history came in 2012 with AlexNet, created by Alex Krizhevsky and Ilya Sutskever together with their supervisor, Geoffrey Hinton.

AlexNet was an image recognition model that won the 2012 ImageNet competition by a significant margin: its top-5 error rate was about 15%, more than 10 percentage points lower than the runner-up's roughly 26%.

Take a look at the leap in performance between AlexNet and its competitors from the same year and the winners from the two preceding years.
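The competition's headline metric was the top-5 error rate: a prediction counts as correct if the true label appears anywhere among the model's five highest-scoring guesses. Here's a minimal Python sketch of that metric, followed by the arithmetic behind AlexNet's lead, using the commonly cited figures of 15.3% for AlexNet and 26.2% for the runner-up. The toy scores, labels, and function name are made up for illustration.

```python
def top_k_error(scores: list[list[float]], labels: list[int], k: int = 5) -> float:
    """Fraction of examples whose true label is NOT among the k highest-scoring classes."""
    misses = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores for this example.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Hypothetical toy example: 3 images, 7 classes, k = 5.
toy_scores = [
    [0.9, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0],       # true label 0 is the top guess -> hit
    [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.05],      # true label 6 scores lowest -> miss
    [0.3, 0.2, 0.1, 0.05, 0.25, 0.08, 0.02],   # true label 4 ranks 2nd -> hit
]
toy_labels = [0, 6, 4]
print(top_k_error(toy_scores, toy_labels))  # 1 miss out of 3 -> 0.333...

# AlexNet's 2012 lead, in absolute and relative terms.
alexnet, runner_up = 15.3, 26.2
print(f"{runner_up - alexnet:.1f} percentage points lower")
print(f"{(runner_up - alexnet) / runner_up:.0%} fewer errors relative to the runner-up")
```

The gap works out to about 10.9 percentage points, which means AlexNet made roughly 42% fewer top-5 mistakes than the next-best entry.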

The AlexNet team did something different from most other teams: they trained their model on two NVIDIA GPUs, using the competition's roughly 1.2 million training images. This was much faster than the typical approach of training on CPUs. A CPU has a handful of powerful cores built to work through tasks largely one after another, while a GPU has hundreds or thousands of simpler cores designed for parallel processing. That makes GPUs far more efficient for the enormous number of identical calculations involved in training deep neural networks on large amounts of data.
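To see why this workload suits GPUs, consider the matrix multiplication at the heart of a neural-network layer: every output value is its own independent dot product, so none of them has to wait for another. The plain-Python sketch below only illustrates that independence; it is not how GPUs are actually programmed (real GPU code goes through platforms such as NVIDIA's CUDA). It computes the same product once in a sequential loop and once by handing each output cell to a thread pool, and gets identical results. The function names and tiny matrices are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def dot(row, col) -> float:
    return sum(a * b for a, b in zip(row, col))


def matmul_sequential(A, B):
    """CPU-style: compute each output cell one after another."""
    cols = list(zip(*B))
    return [[dot(r, c) for c in cols] for r in A]


def matmul_parallel(A, B, workers: int = 8):
    """Each output cell is an independent task, so all of them can be dispatched at once.
    (In standard CPython, threads won't speed up this pure-Python math; the point is
    that no cell depends on any other, which is what lets a GPU run them side by side.)"""
    cols = list(zip(*B))
    cells = list(product(range(len(A)), range(len(cols))))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda ij: dot(A[ij[0]], cols[ij[1]]), cells))
    # pool.map keeps input order, so reassemble the flat results into rows.
    n = len(cols)
    return [values[i * n:(i + 1) * n] for i in range(len(A))]


if __name__ == "__main__":
    A = [[1, 2, 3], [4, 5, 6]]        # e.g. 2 inputs x 3 features
    B = [[7, 8], [9, 10], [11, 12]]   # e.g. 3 features x 2 neurons
    print(matmul_sequential(A, B))
    print(matmul_parallel(A, B))
```

Both calls return the same matrix. On a GPU, each of those independent dot products can run on its own core at the same time, which is why training that took weeks on CPUs became practical.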

The success of AlexNet ignited interest and investment in deep learning. AI slowly started playing a bigger role in our lives as big tech companies jumped on board:

Voice Assistants: Siri, Google Assistant, and Alexa were built into phones, speakers, and many other devices around us, letting us ask questions and give commands using only our voice.

Recommendation Systems: Platforms like Netflix and Amazon utilized AI to analyze user preferences and provide personalized content, improving user experience and engagement.

Self-Driving Cars: Companies like Tesla and Waymo built and trained cars to process their environment and make autonomous driving decisions.

Even then, AI was a subtle layer behind most of these applications. People didn’t care whether AI was under the hood or not. They just wanted useful applications that solved their immediate needs.

The argument that started OpenAI

The most consequential event in AI’s history might’ve occurred at Elon Musk’s 44th birthday in 2015.

During the party, Elon and his close friend, Google co-founder Larry Page, got into a heated argument about the future and dangers of AI.

"While Musk argued that safeguards were needed to prevent A.I. from potentially eliminating the human race, Page retorted that machines surpassing humans in intelligence was the next stage of evolution."

– Alexandra Tremayne-Pengelly in The Observer

The argument followed Google’s 2014 acquisition of the prominent AI research lab DeepMind. DeepMind's success and talent had attracted significant attention, and the sale raised concerns about AI advancements being concentrated within a profit-driven tech behemoth.

In response to these concerns, Elon Musk, Sam Altman, and others founded OpenAI in 2015 with the mission of ensuring that "artificial general intelligence benefits all of humanity". OpenAI positioned itself as an open research organization, committed to transparency and collaboration, sharing its findings to promote the safe development of AI.

Since 2015, DeepMind and OpenAI have been pioneers in AI research and their work has already had a global impact on our lives:

DeepMind: AlphaGo defeated Lee Sedol, one of the world's strongest Go players, in 2016, showcasing the power of deep reinforcement learning. It was followed by AlphaFold, which largely solved the long-standing challenge of predicting a protein's 3D structure from its amino-acid sequence, revolutionizing biological research.

OpenAI: Developed the GPT series of large language models, with GPT-3 and GPT-4 revolutionizing natural language processing. They also released a little tool called ChatGPT that brought AI to the mainstream.

AI is not in the shadows anymore. Today, it’s in the headlines and on everyone’s minds.

Big tech companies are racing to reap the fruits of consumer excitement. Startups have spotted a window of opportunity to innovate and dethrone the incumbents. People are torn between the benefits AI can provide, the harms it creates, and most importantly, the existential question:

“If AI can do it all, what am I good for?”

The End.

It feels like the real story is just beginning.

🚨 Your feedback is needed 🚨

I realize there are many different terms, acronyms, and other jargon used when talking about AI. I’m thinking of creating a mini-book/glossary explaining what each term or concept means, why it’s important, along with a real-life example or application. Is that something you’re interested in?

One final note

Thank you for following this 3-part series on the history of AI. I think it’s crucial for all of us to understand history, especially when it’s about a technology that seems to be creeping into more aspects of our lives every day.

This series is part of my upcoming “What you need to know about AI” course. I’m making this course for non-technical people who would like to build a strong understanding of what AI is and how it works, and I plan on offering it for free.

Knowledge gives us a sense of clarity and confidence, and eliminates fears and misconceptions that we might’ve had. If you’re interested, you can sign up for the waitlist below.

🔮 Share this article with someone

Share this post in your group chats with friends, family, and coworkers.

If a friend sent this to you, subscribe for free to receive practical insights, case studies, and resources to help you understand and embrace AI in your life and work.

⏮️ What you may have missed

If you missed Part 1 and Part 2:

You can also check out the Year 2049 archive to browse all previous case studies, insights, and tutorials.