The top 5 AI quotes from Collision 2024

Geoffrey Hinton, Maria Sharapova, and others share memorable lines about the technology we can't stop talking about

I attended the Collision conference in Toronto last week, where AI was (unsurprisingly) the hot topic. Out of the myriad opinions and predictions, a handful stood out enough that I had to share them with you.

1. Keep the hand of the artist in the creative process – Philip Hunt, Studio AKA

Philip Hunt, the Oscar-nominated Creative Director from Studio AKA, shared his thoughts on AI in the creative process. The one question on every creative’s mind is “Will AI take creative jobs?”

Philip argued that AI is far more useful as an assistant that helps creatives complete their work faster. One example he shared was an experiment where AI was used to animate between different frames, replacing the need to draw every single frame by hand.

What resonated with me the most was his call to keep the human element and the creative’s “hand” in the process. Animation, like every other form of art, needs a human touch to strike a chord and make us feel something. It’s not about mass-producing AI-generated content just because we can. Ultimately, people want to hear captivating and engaging stories, and those will only come from the humans behind the art.

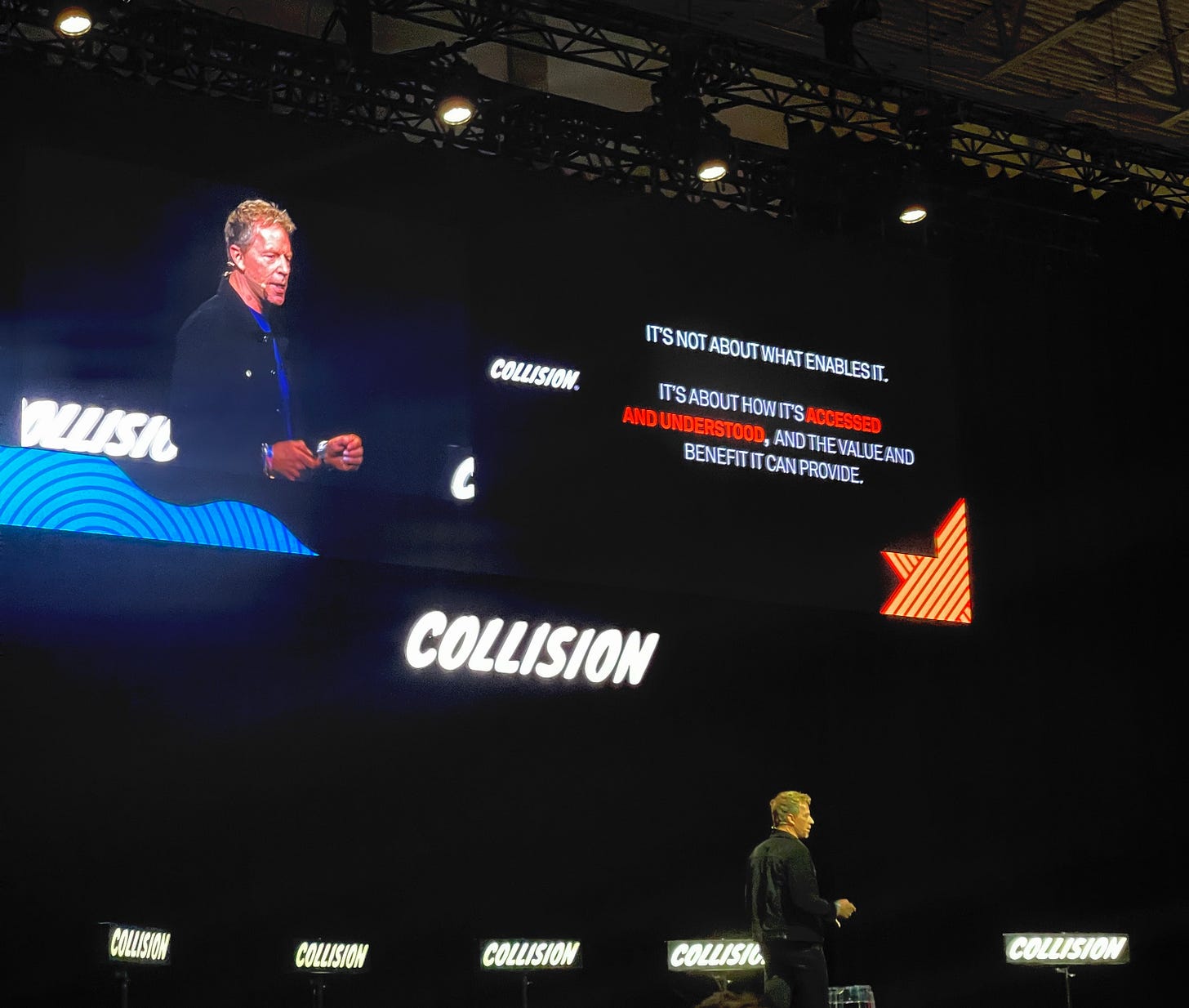

2. It’s not about what enables it, it’s about the value and benefit it can provide – Robert Brunner, Ammunition

Robert Brunner, the legendary industrial designer behind products like Beats by Dr. Dre, was another standout of the conference.

Maybe my bias as a designer is creeping in, but he pointed to one of the biggest issues I see with AI deployments today. Robert said:

“It’s not about what enables it. It’s about how it’s accessed and understood, and the value and benefit it can provide.”

The problem with being “AI-first” is that it makes us too focused on what enables our product or solution (AI in this case), instead of focusing on solving a problem and providing value to people no matter the technology.

3. AI gives the illusion of accuracy without the obligation of accuracy – Dan Rosensweig, Chegg

Chegg’s CEO was blunt and honest in pointing out generative AI’s biggest weakness. AI can generate almost anything you want: text, images, videos, and even music. But it doesn’t guarantee accuracy, which is paramount in the context of education.

At this point, most of us have encountered some sort of AI hallucination when using ChatGPT. Dan suggested that companies should lean into their expertise to build more reliable and accurate systems using their proprietary data. Not only does that help companies create more unique and reliable AI experiences, but it also keeps them relevant against big players like OpenAI and Google.

In Chegg’s case, that meant leveraging the thousands of questions and answers in their library to provide a reliable learning assistant for students. ChatGPT, Claude, or Gemini will never replace every app or piece of software because they are general-purpose tools that aren’t optimized for specific use cases. When you have a product that serves a specific purpose and audience, you can create a better user experience and improve the quality of responses by leveraging your unique data and expertise.

4. “My coach would probably have more time to have dinner with his family” – Maria Sharapova

When asked about how AI could benefit her as a player today, Maria Sharapova’s immediate answer revolved around her coach.

Back in her playing days, her coach would spend hours collecting footage and other stats about her opponents to prepare her before a match. If her coach had access to today’s technology, he would probably have more time to have dinner with his family.

This simple sentence reminded me what technology should be about: making our work easier so we have time for more meaningful things, like spending time with our loved ones.

5. “The problem is always us” – Geoffrey Hinton

The Godfather of AI, Geoffrey Hinton, also made an appearance. His concerns about the risks and dangers of AI have been well-documented over the past year, and he reiterated them during his talk. He’s concerned about:

Deepfakes to mislead voters during elections

Lethal autonomous weapons

Job losses and the widening of the gap between rich and poor

Surveillance from authoritarian regimes

Cybercrime

Bioterrorism

The existential threat of AI going rogue and taking over

The list of risks gave me a minor panic attack while I listened to him. After all, he is one of the world’s foremost experts on the topic, and we should listen to him carefully.

But he closed on an optimistic note, saying that there are just as many good outcomes we could see from AI. One example he gave was the massive potential of AI in medicine to help diagnose people more accurately.

His final line was: “The problem is always us.”

It’s a reminder that we should all play a proactive role in ensuring AI is used to maximize the good outcomes and avoid the dangerous ones. Hinton’s recommendations included strong government regulations that require AI companies to conduct rigorous testing, educating the public about AI and how it can be misused (as with deepfakes), and being cautious when developing fully autonomous AI agents (even when they’re built for benevolent purposes).
