🥊 The OpenAI vs Google saga continues

Everything you need to know about Gemini 1.5 Pro and Sora

Welcome back to Year 2049, your source of practical insights, case studies, and resources to help you embrace and harness the power of AI in your life, work, and business.

If this was forwarded to you, you can subscribe to receive Year 2049 in your inbox every Friday.

Updates

Before we get into today’s issue, some updates for you:

Building custom AI assistants and automations with no code: It’s been super exciting and motivating to see everyone’s interest in this in-depth course! As a reminder, you’ll get early access and 50% off the course I’m currently building by answering a few short questions.

AI’s big day

Just as we start settling down and normalizing the latest AI advancements, OpenAI and Google keep surprising us and pushing us into uncharted territories.

Yesterday was one of the most interesting rounds of this never-ending saga between the two companies.

Google introduced Gemini 1.5, its latest family of multimodal models. A few hours later, OpenAI teased its text-to-video model, Sora.

It’s safe to say that Sora overtook the headlines, casting a giant shadow on Google’s impressive breakthroughs in its latest Gemini model.

Here at Year 2049, I like to give equal attention to the amazing work that both companies are putting out. So, here’s everything you need to know about Gemini 1.5 Pro and Sora so you can explain it to all your family, friends, or coworkers over the long weekend.

Everything you need to know about Google’s Gemini 1.5 Pro

Because AI model names are getting confusing, here’s a quick refresher:

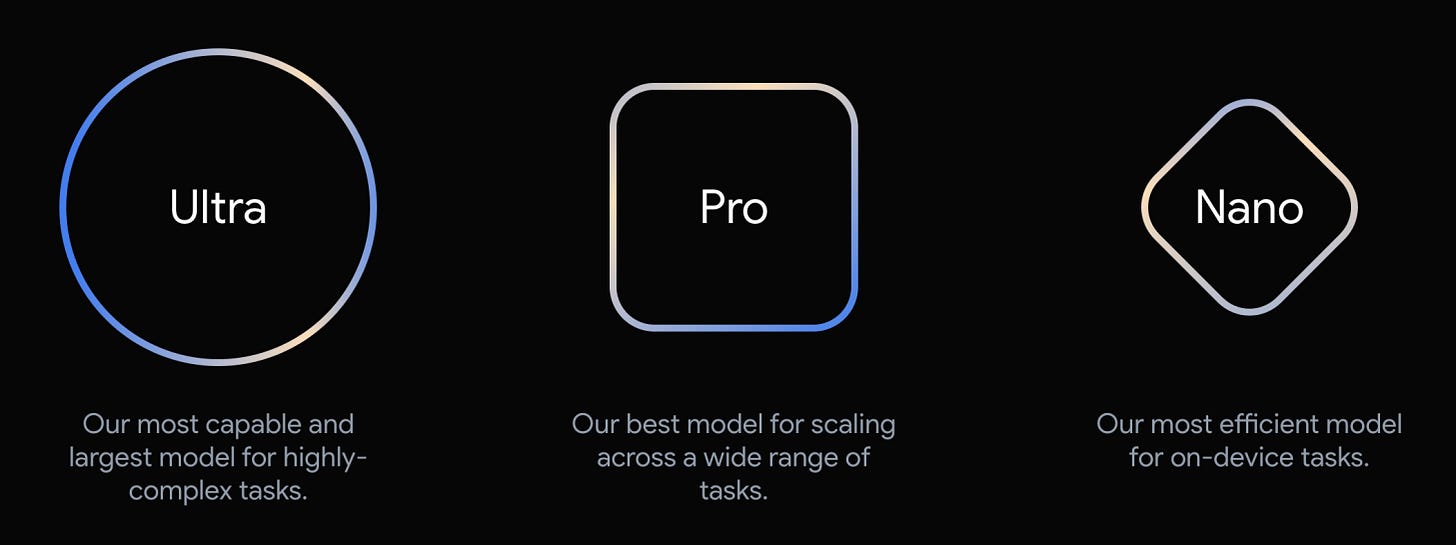

Google’s Gemini models come in three sizes:

Nano → the small-size model, meant to be run locally on smartphones for simple tasks

Pro → the mid-size model, meant for a wide range of tasks

Ultra → the large-size and most capable model

These aren’t to be confused with Gemini Advanced which is the name of the paid plan to use the Gemini chatbot (the equivalent of ChatGPT Plus).

Yesterday, Google introduced Gemini 1.5 which follows the family of Gemini 1.0 models. According to their press release, “1.5 Pro achieves comparable quality to 1.0 Ultra, while using less compute”.

A rundown of 1.5 Pro’s breakthroughs:

1 million token context window: 1.5 Pro can process up to 1 million tokens of context, which is the longest context window of any foundation model at the moment (GPT-4 has a 128k token context window). For reference, 1 million tokens is equivalent to:

1 hour of video, or

11 hours of audio, or

700,000 words

10 million token context window (⁉️): In Google’s testing, they’ve successfully tested up to 10 million tokens of context. However, they didn’t clearly state whether that would be rolled out. Only the standard 128k and new 1M token context windows are expected to be available.

Architecture: 1.5 Pro is built on a combination of Transformer and Mixture-of-Experts (MoE) architecture. I won’t get into the technical details, but MoE is an additional layer that activates relevant “expert pathways” in the model’s neural network based on the user’s prompt.

Performance: 1.5 Pro outperforms 1.0 Pro but performs at a similar level to 1.0 Ultra, the main advantage being it uses much less compute. The model also maintains its performance even when the context window increases.

Must-watch videos:

Everything you need to know about OpenAI’s Sora

OpenAI quickly overtook Google’s spotlight by teasing its first text-to-video model, Sora.

If you spent any time online yesterday, it was almost impossible to ignore the jaw-dropping videos that Sora generated. My personal favourite was the Pixar animation-style video of the monster and the candle.

If you haven’t yet, I encourage you to go to the Sora announcement page and watch all the short videos they published. They’re truly impressive. If you look closely enough, you’ll see some imperfections. But I probably wouldn’t think it was AI-generated if someone sent it to me with no context.

What you need to know:

Availability: Sora is only available to a “red team” that is currently testing it and trying to find vulnerabilities or weaknesses that need to be fixed before a wider release. OpenAI also said it’s testing the tool with a select group of artists, designers, and filmmakers.

Video Length: Sora can generate videos up to a minute long.

Complex and detailed scenes: The example videos that were shared demonstrate Sora’s ability to generate detailed characters, scenes, and landscapes while maintaining a sense of realism. One of the videos even displays an impressive reflection of the glass inside a train.

AI-generated labelling: OpenAI will include C2PA metadata on each video, which documents the creator behind each video, if it was AI-generated, along with other important information. Google said it would also adopt C2PA earlier this month. Here’s a full list of companies following this standard.

Weaknesses: OpenAI says the model still has trouble simulating the physics of a complex scene or certain cause-and-effects. They shared some examples of prompts going wrong, like a guy running backward on a treadmill (but I’ve seen people do this at my gym so maybe it’s not entirely wrong???).

🎥 Videos of the Week

🧠 ChatGPT is finally getting a memory (TikTok | Instagram)

🔨 Fix anything in your house with Google Gemini (TikTok | Instagram)

🤗 Hugging Face now lets you build custom AI assistants with no code (TikTok | Instagram)

🔮 The future is too exciting to keep to yourself

Share this post in your group chats with friends, family, and coworkers.

If a friend sent this to you, subscribe for free to receive practical insights, case studies, and resources to help you understand and embrace AI in your life and work.

⏮️ What you may have missed

If you’re new here, here’s what else I published recently:

You can also check out the Year 2049 archive to browse all previous case studies, insights, and tutorials.

How would you rate this week's edition?