Hey friends 👋

Today’s post was inspired by a question I always hear:

Why are we developing AI in the first place?

I decided to go deep down the rabbit hole of AI’s 70-year history. I wanted to understand its origins and founding ideas, why it almost got defunded into oblivion (twice), and its exponential rise in our times.

I tried my best to summarize 70 years of history into a concise 10-minute read, but it was impossible without leaving out important details. So I decided to split it into 3 smaller parts to make it easier to read with your morning coffee:

Part 1: Sparks of Intelligence

Part 2: AI’s Dark Winter Rollercoaster

Part 3: The Exponential Renaissance

I’m sharing Part 1 today. Parts 2 and 3 will follow soon.

Stay curious,

– Fawzi

Part 1: Sparks of Intelligence

“They learn to speak, write, and do calculations. They have a phenomenal memory. If you were to read them a twenty-volume encyclopedia they could repeat the contents in order, but they never think up anything original.”

– Domin to Helena, Rossum’s Universal Robots by Karel Čapek (1920)

Our fascination with creating intelligent beings is anything but new.

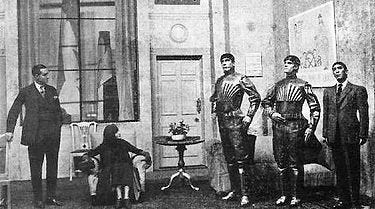

Karel Čapek’s 1920 play, Rossum’s Universal Robots (R.U.R.), accurately foreshadows the AI industry that would start blooming 30 years later.

Young Rossum, an engineer, decides to design and create the perfect worker. He invents the Robot, and his factory starts manufacturing thousands of them while selling them all over the world:

DOMIN: What do you think? From a practical standpoint, what is the best kind of worker?

HELENA: The best? Probably one who—who—who is honest—and dedicated.

DOMIN: No, it’s the one that’s the cheapest. The one with the fewest needs. Young Rossum successfully invented a worker with the smallest number of needs, but to do so he had to simplify him. He chucked everything not directly related to work, and in so doing he pretty much discarded the human being and created the Robot. My dear Miss Glory, Robots are not people. They are mechanically more perfect than we are, they have an astounding intellectual capacity, but they have no soul. Oh, Miss Glory, the creation of an engineer is technically more refined than the product of nature.

This play introduced the world to the term “robot”. The word was created by Čapek’s older brother Josef, and was derived from the Czech word “robota” meaning “forced labour”. In R.U.R., robots looked exactly like humans on the surface but operated completely differently.

DOMIN: Good. You can tell them whatever you want. You can read them the Bible, logarithms, or whatever you please. You can even preach to them about human rights.

HELENA: Oh, I thought that . . . if someone were to show them a bit of love—

FABRY: Impossible, Miss Glory. Nothing is farther from being human than a Robot.

HELENA: Why do you make them then?

BUSMAN: Hahaha, that’s a good one! Why do we make Robots!

FABRY: For work, Miss. One Robot can do the work of two and a half human laborers. The human machine, Miss Glory, was hopelessly imperfect. It needed to be done away with once and for all.

Can machines really think?

The rise of digital computers in the 1940s marked the beginning of practical experiments in artificial intelligence. In 1950, Alan Turing proposed his famous Turing Test (originally called the Imitation Game) to determine whether a machine was demonstrating human-level intelligence.

According to Turing, the question “Can machines think?” was flawed. The terms “machine” and “think” don’t have clear definitions, which makes the question an inadequate measure of intelligence. Instead, the Imitation Game is based on the premise that an intelligent machine should be indistinguishable from a human.

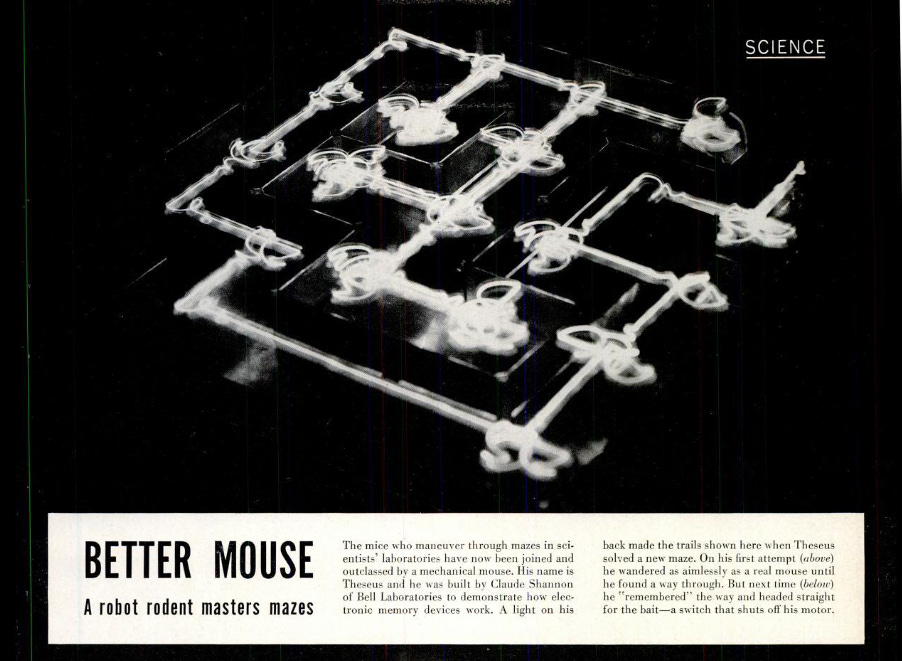

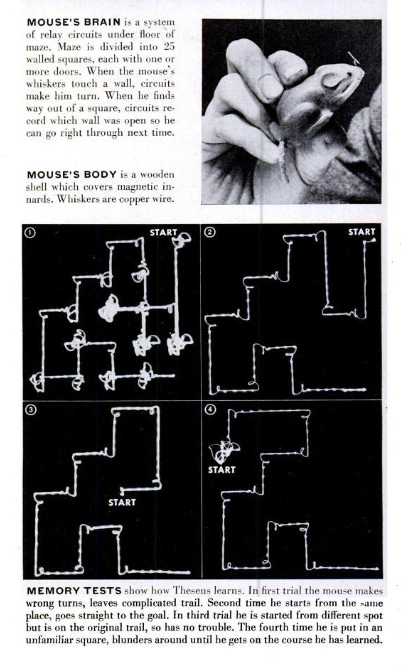

One of the earliest examples of intelligent systems was the mechanical mouse Theseus built by Claude Shannon at Bell Laboratories in 1952. Theseus navigated a maze on its own through trial and error, and was impressive enough to be featured in LIFE Magazine’s July 1952 edition.

The Dartmouth Summer Research Project

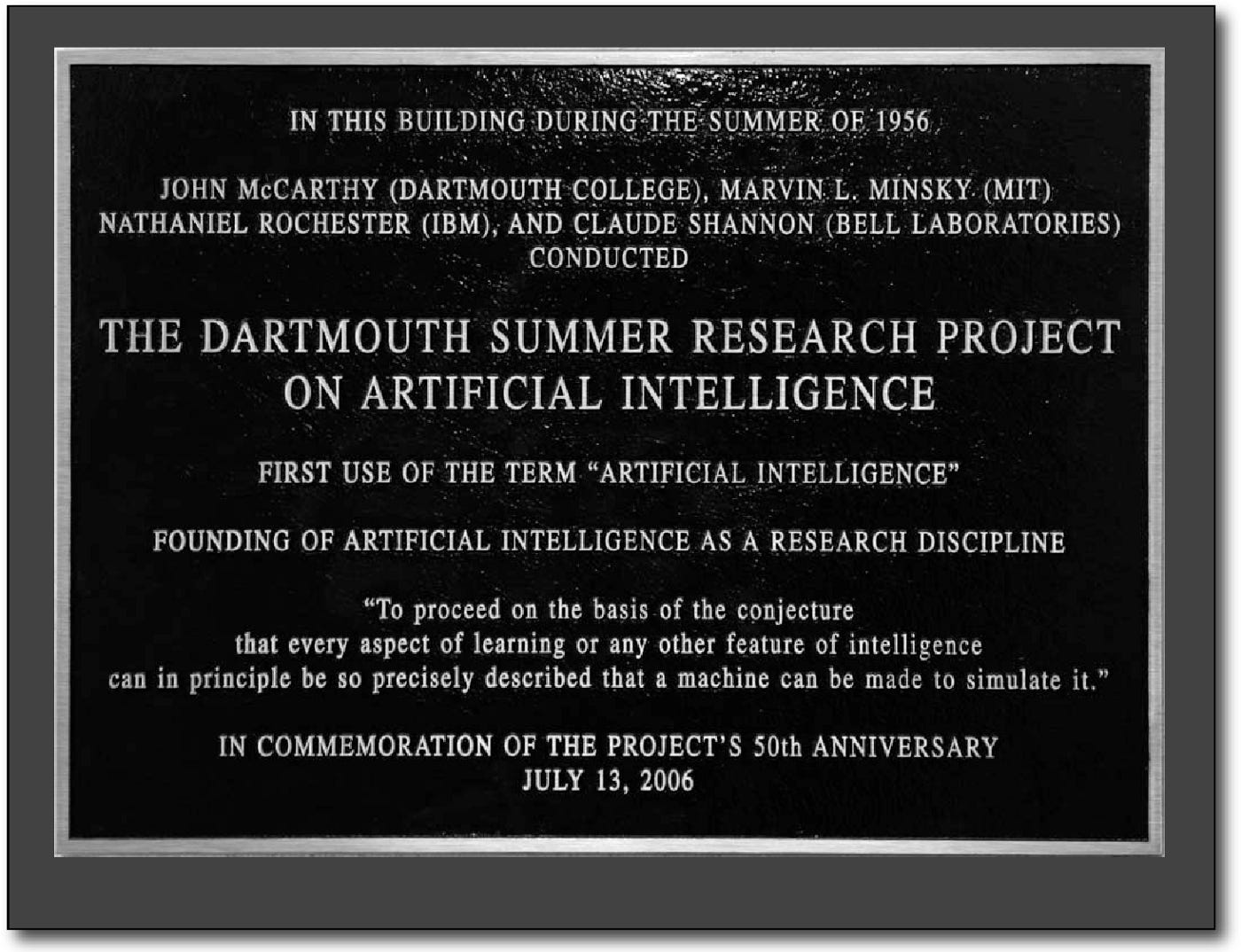

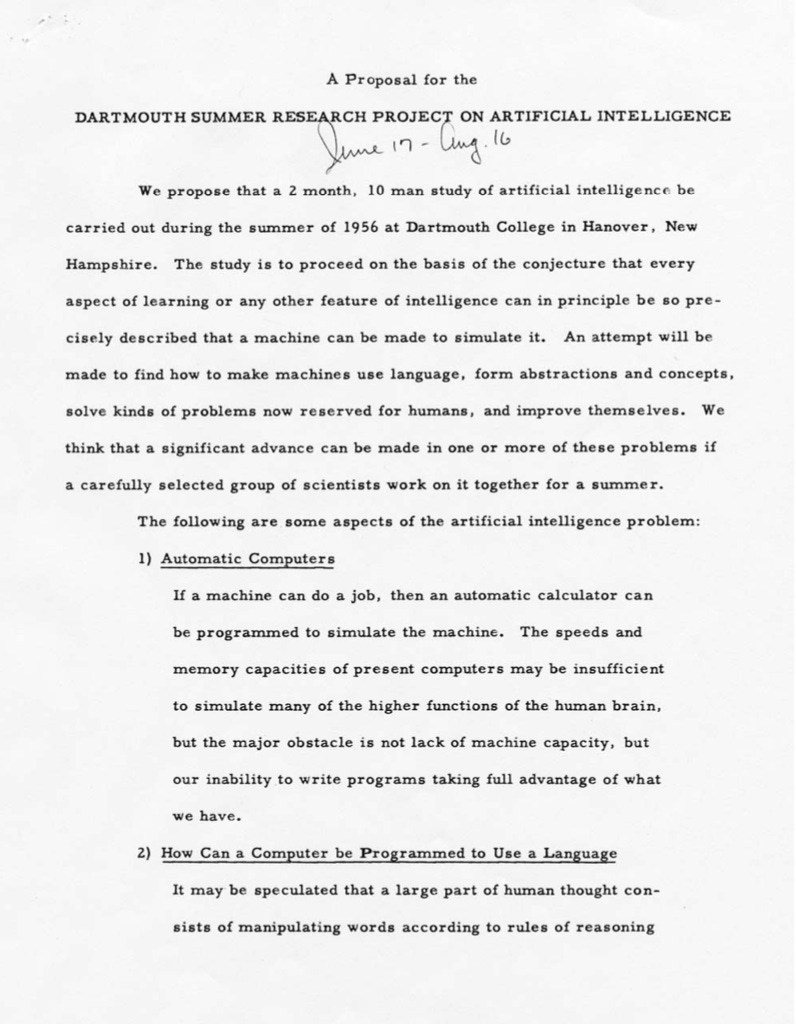

The real canon event for AI came in 1956, when Claude Shannon spent the summer at Dartmouth College with fellow computer scientists John McCarthy, Marvin Minsky from Harvard, and Nathaniel Rochester from IBM.

The term “Artificial Intelligence” was formally adopted then, and the AI field was born.

The goal was simple:

The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.

An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.

(Read the full proposal here)

The Honeymoon Phase

The 1950s and 1960s were a period of great excitement and rapid progress in AI.

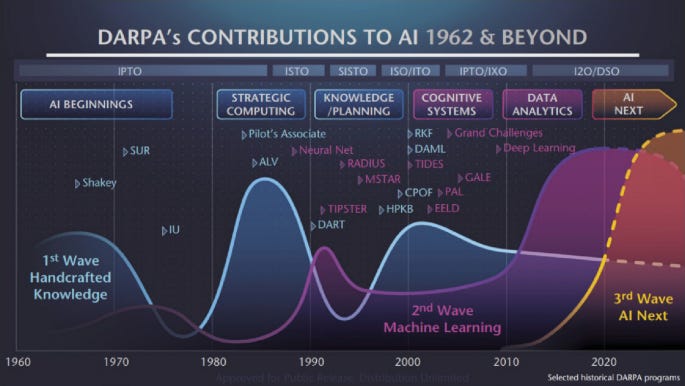

With substantial funding from the U.S. Department of Defense through ARPA (today’s DARPA), researchers developed a wide variety of intelligent systems:

1956: Allen Newell, Herbert A. Simon, and Cliff Shaw built Logic Theorist, a reasoning program that could prove mathematical theorems.

1958: John McCarthy created LISP, a programming language that would become fundamental to AI research.

1959: Arthur Samuel, who developed the first self-learning checkers program, coined the term "machine learning".

1965: Edward Feigenbaum and Joshua Lederberg created DENDRAL, the first "expert system", designed to replicate human decision-making.

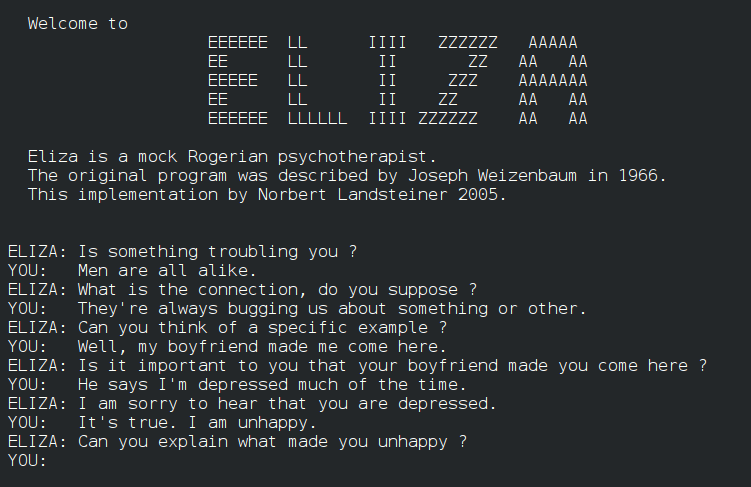

1966: Joseph Weizenbaum introduced ELIZA, the first chatbot, which used natural language processing to converse with humans.

1968: Alexey Ivakhnenko developed the Group Method of Data Handling (GMDH) which laid the foundation for Deep Learning.

Failures and the first AI Winter

With excitement, hype, and generous funding come sky-high expectations.

J.C.R. Licklider, the head of DARPA’s Information Processing Techniques Office at the time, played a key role in backing AI researchers and their ambitions. Licklider also pioneered fundamental ideas that would shape research in AI and computing in his 1960 paper Man-Computer Symbiosis.

In the anticipated symbiotic partnership, men will set the goals, formulate the hypotheses, determine the criteria, and perform the evaluations. Computing machines will do the routinizable work that must be done to prepare the way for insights and decisions in technical and scientific thinking.

– J.C.R. Licklider in Man-Computer Symbiosis (1960)

Hope and optimism quickly transformed into disappointment in the late 60s and early 70s.

Two critical government reports dealt a devastating blow to the AI field and sent the industry into the first AI winter:

The ALPAC Report: In 1966, a committee of scientists selected by the U.S. government evaluated the progress in machine translation and concluded that it was more expensive, less accurate, and slower than human translation. Machine translation had been a top priority for the U.S., which wanted to translate Russian communications during the Cold War.

The Lighthill Report: Across the Atlantic Ocean in 1973, Sir James Lighthill delivered a similar report to the British Government. He concluded that AI failed to achieve its “grandiose” objectives and was unlikely to do so in the foreseeable future.

“Most workers in AI research and in related fields confess to a pronounced feeling of disappointment in what has been achieved in the past twenty-five years. […] In no part of the field have the discoveries made so far produced the major impact that was then promised.”

– Sir James Lighthill, Artificial Intelligence: A General Survey (1972)

Funding was significantly down, but the AI field’s heart was still beating slowly… and it would rise again.


📖 Stories of the week

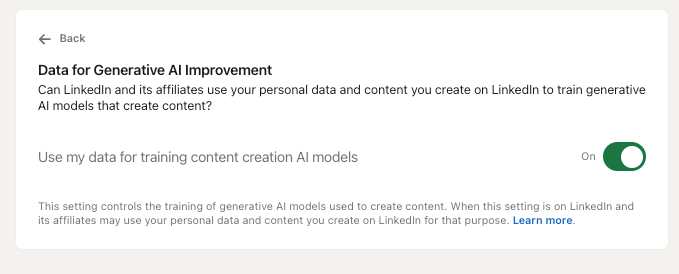

🤬 LinkedIn faces a major AI opt-in backlash

For whatever reason, LinkedIn thought it would be a good idea to automatically opt all its users in to having their content used for AI training. It’s surprising to see LinkedIn, owned by Microsoft, adopt such an approach after the backlash that Adobe got earlier this year for doing the same thing.

The good news is that you can easily opt out by following this link.

🤖 OpenAI launched a preview of its highly-anticipated o1 model

OpenAI finally released a preview of its o1 model. Unlike previous models, it spends more time “thinking” through a problem before responding, which results in better outputs.

There’s one caveat, however. The details behind the reasoning steps aren’t fully displayed, and the model costs more to run because it generates more output tokens for its “thinking” steps. And if you’re wondering why “o1” isn’t called “GPT-5”, here’s OpenAI’s answer:

[…] But for complex reasoning tasks this is a significant advancement and represents a new level of AI capability. Given this, we are resetting the counter back to 1 and naming this series OpenAI o1.

🤖 More from Year 2049

AI for non-technical people course: I’m still working on my “What you need to know about AI” course for non-technical people. I underestimated the time it takes to make a great course, but you can join the waitlist to be the first to hear about it. FYI, the course will be free.

