Automate the doing, not the thinking

"The enemy of knowledge isn't ignorance, it's the illusion of knowledge"

Hey friends,

I’m back from a long and much-needed vacation with my family. I’ll be back in your inbox regularly starting this week, and I’ll be sharing more details about the new product I’m building in the upcoming weeks.

So many of you sent me positive feedback on my recent post (The AI-first trap), so I wanted to keep sharing similar writing to get us thinking about AI in a more rational and responsible way instead of getting carried away by the hype. Don’t forget to share feedback using the link at the bottom of this post.

Let’s get into it.

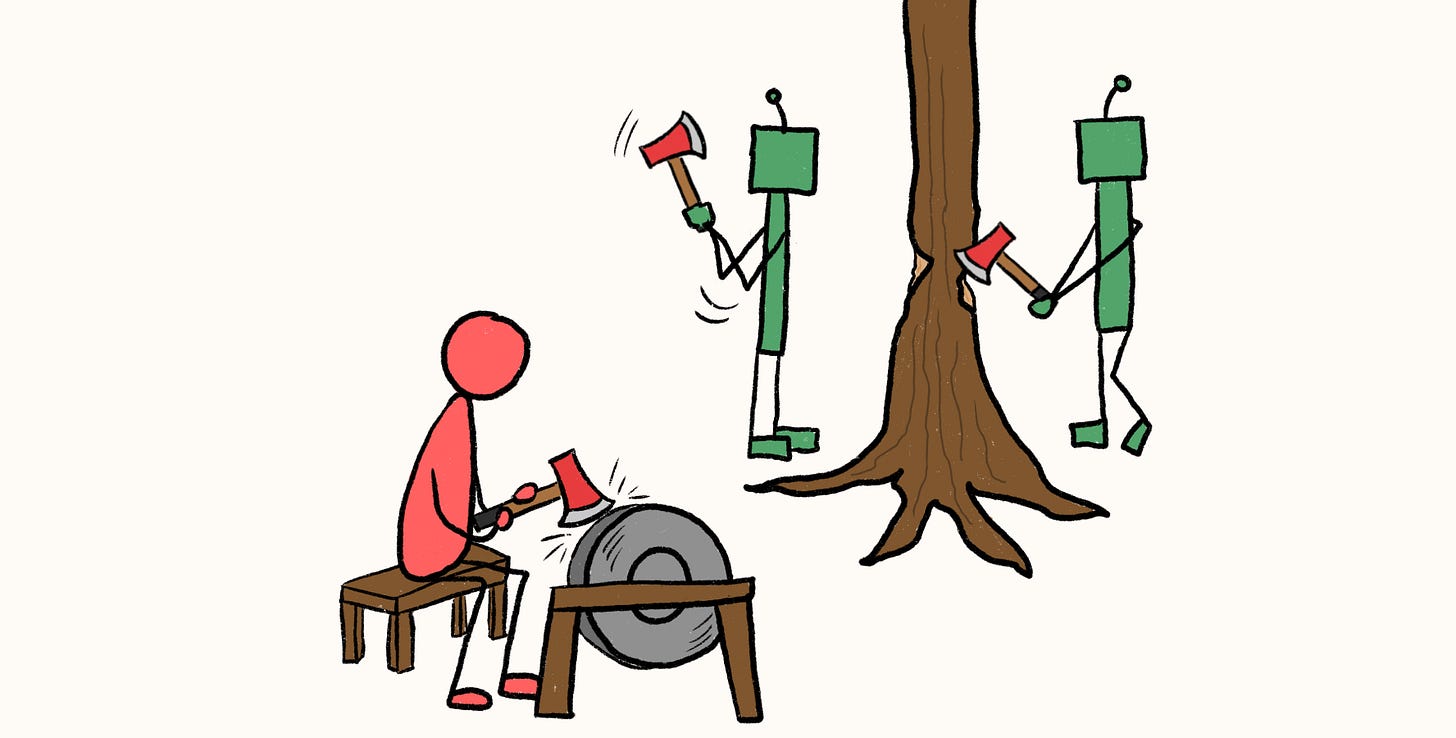

Give me six hours to chop down a tree and I will spend the first four sharpening the axe

– Attributed to Abraham Lincoln

Thinking & Doing

If we had to classify the tasks we do every day, we could give them one of two labels: “thinking” or “doing”.

“Thinking” is when we pause, observe, plan, evaluate, and make decisions. It happens in our head, in a notebook, or by talking to people around us. Thinking helps us determine the best actions to reach a specific goal.

“Doing” is the act of executing our action plan and making changes in our environment to reach our goal. It’s about creating/changing a piece of work, giving/following orders, buying/selling something, and even choosing to do nothing at all.

Thinking is deciding what matters. Doing is executing it.

A familiar example is grocery shopping. We think about what we want to buy by deciding what meals we’re going to cook this week, checking our fridge and pantry, identifying missing ingredients, and making a shopping list. We then go to the grocery store and buy what we need.

Imagine going to the grocery store without thinking of what we were going to cook or looking inside our fridge and pantry. We would buy things we already have, forget what we’re missing, and collect random ingredients that we can’t make a meal out of. A few days later, we’re on Uber Eats with a sense of disappointment and frustration.

In grocery shopping and in any other situation, thinking would’ve saved us time, money, and emotional damage.

AI and the automation of thinking

“The enemy of knowledge isn’t ignorance, it’s the illusion of knowledge.”

(Source: Unknown)

Up until a few years ago, thinking took time. There were no shortcuts.

Technology has usually sped up the “doing” part of things. Machines made it easier to build and assemble things. Computers let us write, organize, and find our work faster. The internet enabled real-time sharing, communication, and collaboration with people across the world.

We still had to think of what to create, build, write, and communicate.

AI changed this.

For the first time, we had tools that could “automate” our thinking. Instead of taking a step back to think about a problem we’re facing, whether at work or in our personal lives, we could simply ask this new “intelligent” system and get an immediate idea or suggestion.

It sounds harmless. These tools are free and easily accessible, so we might as well give them a shot, right?

If something is free, we’re usually paying for it in another way.

Experts debate whether AI is “thinking” like we do or whether it’s just a pattern recognition and generation machine. This sparks an endless debate about metacognition and psychology that I’m not qualified to discuss.

What’s undeniable is that AI has clear shortcomings:

AI loves to agree with you, even when you’re wrong: This tendency is called sycophancy, and it means the tool is more likely to validate your view than to challenge it. To understand this better, watch my AI Sycophancy explainer.

AI lies to you and it doesn’t know it: By now, most people are aware of AI’s hallucination problem. Unfortunately, AI doesn’t have a self-correction mechanism, and the problem is a byproduct of how these systems operate. To understand this better, watch my How AI generates text explainer.

AI is biased: The data used to train AI is pretty much everything on the internet. Imagine it as one gigantic smoothie of text, ideas, opinions, and biases. As in a smoothie with too many bananas, the dominant flavor drowns out the subtle ingredients that could enrich your thinking and decision-making. To understand this better, watch my How AI becomes biased explainer.

Usually, the word “artificial” tells us something isn’t as good as the real thing. We love real diamonds, grass, plants, flowers, and hair. We shun their artificial counterparts. But in the context of AI, we seem to treat the “artificial” label as a sign of legitimacy and superiority.

We expose ourselves to flawed thinking and decision-making by over-relying on these systems while undervaluing and ignoring our own intelligence.

The WALL•E and Iron Man visions of the future

WALL•E and Iron Man offer extreme yet useful visions of the future depending on how we work and co-exist with AI: one vision of how it can ruin us, and one where it helps us thrive.

The WALL•E future

WALL•E, one of Pixar’s all-time classics, paints a vision of the future where Earth becomes a toxic wasteland because of overconsumption and environmental neglect. All humans have been evacuated aboard a spaceship called the Axiom where they live pathetic and meaningless lives.

On the spaceship, the “Axiom Humans” have become overweight and lazy. They’re glued to hovering recliner chairs, eat junk food all day, watch an endless stream of content, and have robots that cater to their every need.

The vision is extreme, but elements of it feel familiar to our reality: a society driven by overconsumption and a desire to delegate as much as possible to machines. Ironically, WALL•E shows a future where humans become more robotic, and robots become more human.

WALL•E shows extreme convenience bought at the cost of the extreme dumbification of society.

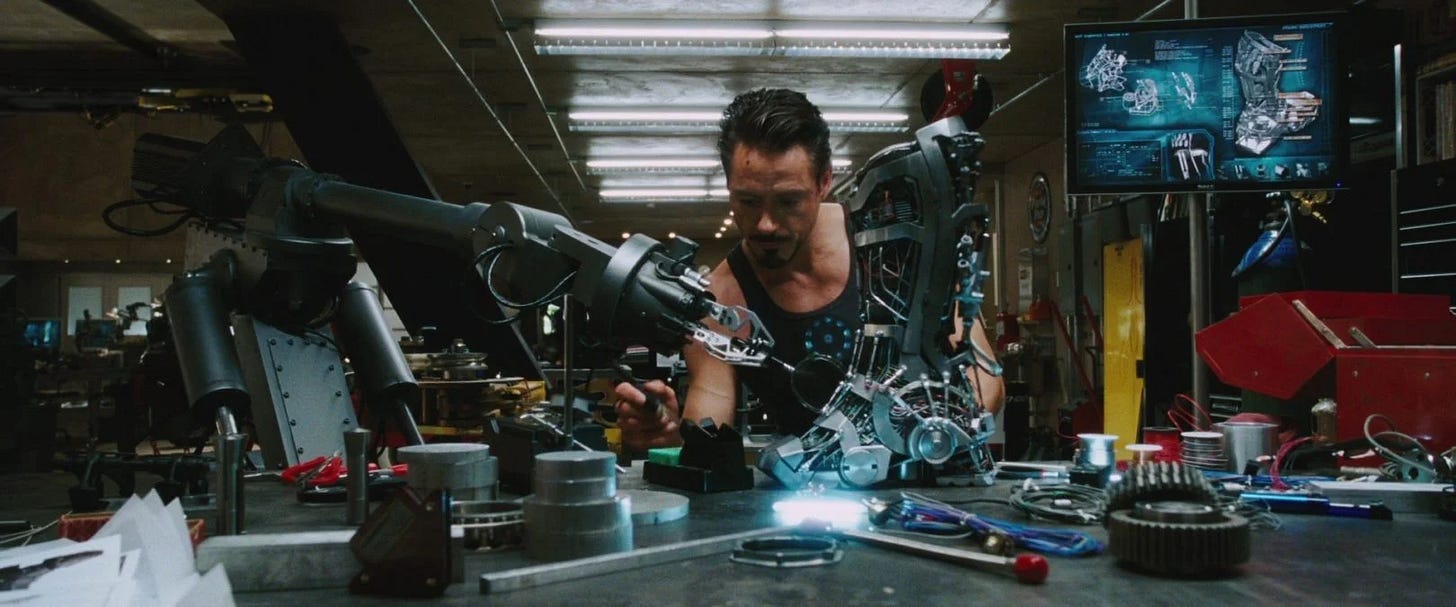

The Iron Man future

Iron Man offers a more optimistic and exciting vision.

If you rewatch any Iron Man movie, you’ll notice the subtle lessons behind Tony Stark’s interactions with JARVIS, his AI assistant. Stark doesn’t use JARVIS to replace him so he can sit on a beach all day sipping margaritas. Instead, JARVIS is the sidekick that supports and accelerates the “doing” part of his work.

After all, the Iron Man suit was a result of Tony’s thinking while being held hostage in a cave. He didn’t need shiny technology to come up with a revolutionary idea. All he had was a box of scraps and a brain filled with imagination, knowledge, and expertise.

Even when he returned home and built better versions of the Iron Man suit, Tony still used JARVIS to automate the “doing”, not the “thinking”. JARVIS quickly designed suits based on Tony’s specs, swapped elements and made variations based on his feedback, and built them while he was away.

Think Slowly, Act Quickly

Our brain’s default mode of operation makes these challenges harder to overcome. But understanding how our own brain works can help us avoid making mistakes with AI.

In his book “Thinking, Fast and Slow”, Daniel Kahneman discusses our two modes of cognitive processing: System 1 and System 2 thinking.

System 1 is the fast, automatic, and intuitive mode of thinking that works with little to no effort.

System 2 is our slow, deliberate, and analytical mode of thinking that requires intentional effort.

Another way to think of these is as unconscious thinking (System 1) and conscious thinking (System 2). This framework is also known as dual process theory.

💡 Further reading: System 1 and System 2 Thinking by The Decision Lab

One of Kahneman’s arguments is that our brains are wired to preserve energy and follow “the path of least effort”. System 2 wants to preserve energy whenever possible, and we only activate it when we really need to. We make the biggest mistakes when we rely too much on our fast and intuitive thinking (System 1). Our intuition comes up with plausible answers, but they are often incorrect.

AI created a new “path of least effort” for thinking. It gives the illusion of a System 2 thinking machine, but shares more flaws with System 1 thinking: it comes up with plausible answers that prioritize speed over accuracy, with a dash of hallucinations, bias, and sycophancy.

Our own cognitive biases make these problems harder to spot:

Authority bias is our tendency to be influenced by “authority figures”. Companies keep advertising AI models as smarter, record-breaking, benchmark-setting, and “approaching AGI”. The average consumer might interpret AI claims and headlines as “AI is smarter than anyone I know, so it’s going to give me the best answer possible in a few seconds”. Deceptive marketing has made people internalize this belief.

Confirmation bias is our tendency to favour information that supports our existing beliefs. This creates an unhealthy loyalty to a tool that agrees with us, because agreement makes us feel better and boosts our ego. Friction creates discomfort and dissatisfaction, even when it’s beneficial for us. A recent example of this is GPT-5’s release: OpenAI made it less agreeable than GPT-4o and users perceived it as “worse”, even though it scored higher on technical benchmarks.

Thinking is more valuable than ever

AI can generate almost anything for insignificant fractions of a dollar. The cost of “doing” has never been lower.

But when the cost of doing becomes negligible, careful thinking becomes priceless.

Sure, anyone can create mediocre art, books, and apps. But making remarkable art, books, and apps is as hard as ever. I would be a hypocrite if I said I never used AI to get an idea or to think through a problem. But I treat its answers as suggestions, not prophecies.

We can maintain our intelligence, agency, and quality of work by simply reminding ourselves to use AI as a tool for doing rather than a replacement for thinking.

The winners of the future are those who will still think slowly, deeply, and carefully, while using AI as a conduit to bring their own ideas to life.

Sharpen the axe, always.
