🎢 AI's Dark Winter Rollercoaster

Part 2 of 3: The two AI winters and how the industry became an embarrassment (and almost taboo)

Hey friends 👋

This is Part 2 of my mini-series on the history of AI. If you missed Part 1 last week, you can still read it here.

Enjoy,

– Fawzi

Today’s Edition

Main Story: AI’s Dark Winter Rollercoaster

Stories of the week: Meta Connect announcements, big changes at OpenAI

Bonus: A giveaway from NVIDIA

Read Part 1: Sparks of Intelligence

Part 2: AI’s Dark Winter Rollercoaster

“Don’t make promises you can’t keep” is timeless advice.

Unfortunately, the AI researchers of the 1950s and 1960s made ambitious promises, and DARPA said “take my money” without hesitation.

“Machines will be capable, within twenty years, of doing any work a man can do.”

– Herbert A. Simon in 1965

“In from three to eight years we will have a machine with the general intelligence of an average human being.”

– Marvin Minsky in 1970

But the ALPAC report (1966) and the Lighthill report (1973) highlighted how the AI field had failed to live up to its promises, despite millions of dollars spent over 20 years of research and experimentation.

The First AI Winter

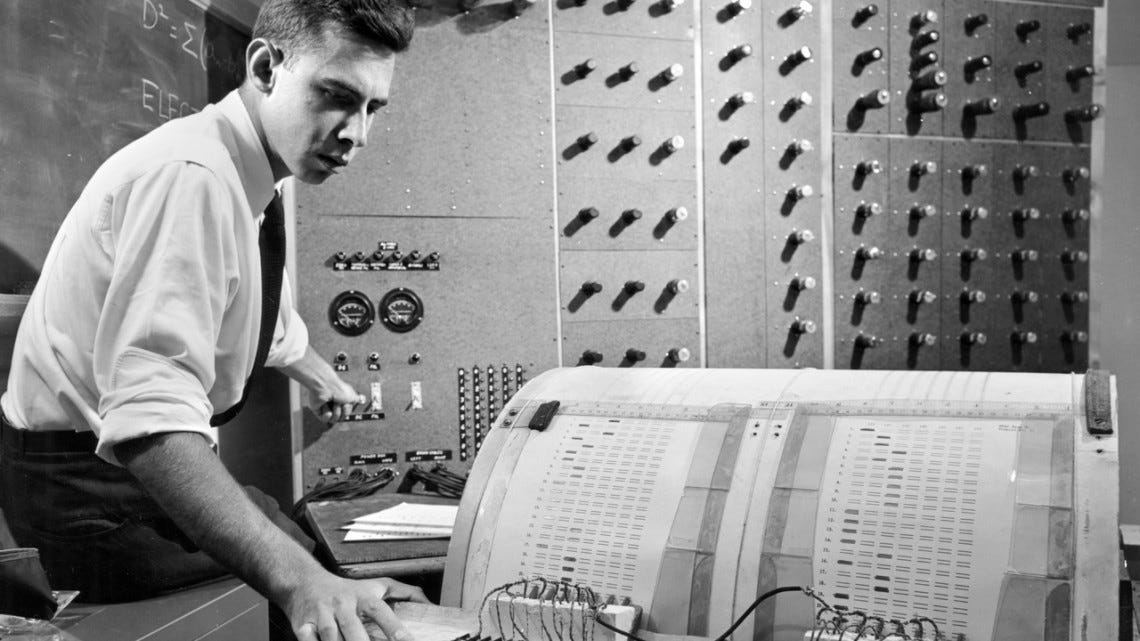

J.C.R. Licklider, head of DARPA’s Information Processing Techniques Office, initially wanted to “fund people, not projects”. In other words, he gave computer scientists like Marvin Minsky and Herbert Simon the freedom to spend research funds however they wanted.

After underwhelming results, DARPA modified its funding strategy.

“Many researchers were caught up in a web of increasing exaggeration. Their initial promises to DARPA had been much too optimistic. Of course, what they delivered stopped considerably short of that. But they felt they couldn't in their next proposal promise less than in the first one, so they promised more.”

– Hans Moravec, quoted by AI researcher Daniel Crevier in “AI: The Tumultuous History of the Search for Artificial Intelligence”

Funding moved towards projects with immediate military applications. This dried up funding for many AI research projects, forcing some computer scientists to abandon the field altogether.

Another source of breakdown in the field was a division between two schools of thought on how to build AI systems:

Symbolic AI (aka the “top-down approach”) advocated for the use of rules, symbols, and logic to explicitly program intelligent systems. Decision trees and expert systems are examples of this approach.

Connectionist AI (aka the “bottom-up approach”) promoted the idea of letting machines learn from data and identify their own patterns, instead of explicit programming. This area was focused on machine learning, neural networks, and deep learning.

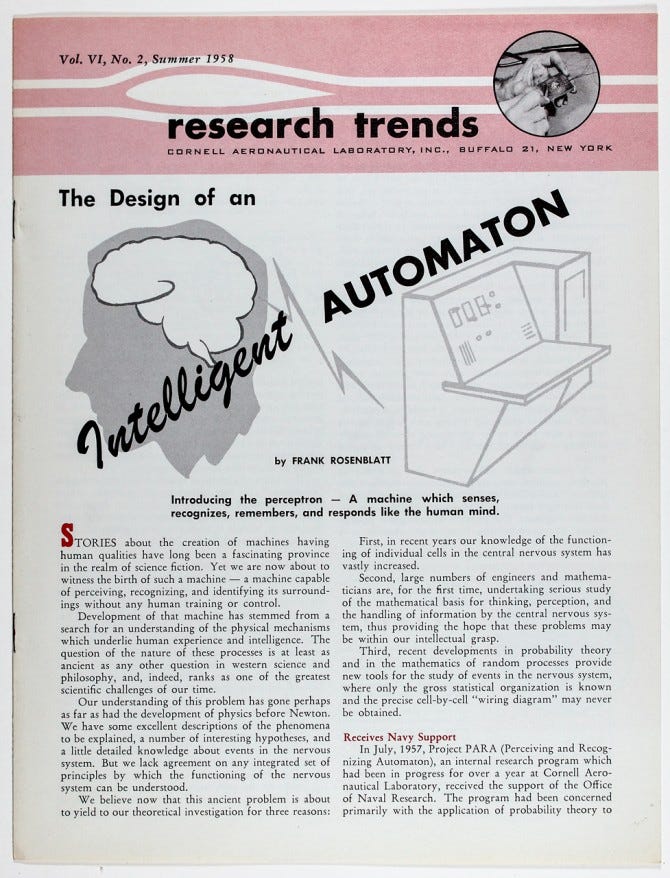

Just like Drake and Kendrick Lamar in 2024, there was beef between Marvin Minsky, who advocated for Symbolic AI, and Frank Rosenblatt, who promoted the Connectionist approach.

The debate was so intense that Marvin Minsky, along with Seymour Papert, published a book called Perceptrons (1969) that criticized Rosenblatt’s work on perceptrons, a simple type of neural network used for pattern recognition tasks like image classification. How savage.

The Perceptrons book had a devastating impact on research funding and interest in neural networks, and Connectionist AI as a whole.
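For the curious, here’s a rough idea of what a perceptron does. This is a minimal Python sketch of Rosenblatt’s learning rule (not his original Mark I hardware), and the function names, learning rate and number of training rounds are my own illustrative choices. It learns the AND function easily, but it can never get XOR fully right, because no single straight line separates XOR’s outputs. That kind of limitation of single-layer perceptrons is what Minsky and Papert’s book is best remembered for.

```python
# A single-layer perceptron trained with Rosenblatt's learning rule (illustrative sketch).

def predict(w, b, x):
    """Step activation: output 1 if the weighted sum is above zero, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights w and bias b so that predict() matches each 0/1 label."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(w, b, x)  # -1, 0, or +1
            # Only adjust the weights when the prediction is wrong.
            w[0] += lr * error * x[0]
            w[1] += lr * error * x[1]
            b += lr * error
    return w, b

def accuracy(samples, w, b):
    """Fraction of samples the perceptron classifies correctly."""
    return sum(predict(w, b, x) == y for x, y in samples) / len(samples)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in [("AND", AND), ("XOR", XOR)]:
    w, b = train_perceptron(data)
    print(name, "accuracy:", accuracy(data, w, b))
```

No matter how long you train it, the XOR accuracy stays below 100%. Networks with a hidden layer can handle XOR, but training them well required methods that only became popular later.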

The Resurgence

Funding was significantly down, but the AI field wasn’t dead.

Some AI researchers were still working quietly in the shadows. It wasn’t until the 1980s that interest in AI surged again.

One of the pivotal creations in AI’s history is an expert system called XCON. An expert system is a type of program that can simulate the decision-making of a human expert following a set of rules.

Launched in 1980, XCON checked sales orders and designed computer system configurations based on a customer’s requirements. In its first 6 years, XCON processed 80,000 orders with 95% accuracy while saving its company, Digital Equipment Corporation (DEC), an estimated $25 million per year.

AI had finally shown its commercial value.
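To make “following a set of rules” a bit more concrete, here’s a toy expert system in Python that uses forward chaining: it keeps firing if-then rules until no new facts appear. The rules and fact names are hypothetical and far simpler than XCON’s real rule base, so treat this as a sketch of the general idea only.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset  # facts that must all be known for the rule to fire
    conclusion: str        # new fact the rule adds when it fires

# Hypothetical configuration rules, loosely in the spirit of order-checking systems.
RULES = [
    Rule("needs-disk-controller", frozenset({"order:disk_drive"}), "add:disk_controller"),
    Rule("needs-cabinet", frozenset({"add:disk_controller"}), "add:expansion_cabinet"),
    Rule("needs-power", frozenset({"add:expansion_cabinet"}), "add:power_supply"),
]

def forward_chain(facts, rules):
    """Fire every rule whose conditions are met until no new facts are produced."""
    known = set(facts)
    trace = []  # names of rules in the order they fired
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(rule.name)
                changed = True
    return known, trace

facts, trace = forward_chain({"order:cpu", "order:disk_drive"}, RULES)
print("rules fired:", trace)
print("final configuration:", sorted(facts))
```

Notice that every rule is written by hand. That makes expert systems easy to explain, but it also means a large rule base gets painful to update and maintain.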

But nothing gives people more motivation than the fear of falling behind.

In 1982, Japan launched its Fifth Generation Computer Systems initiative to advance its computing and AI capabilities. The US and the UK, fearing Japanese dominance in computing, were quick to react and launched their own research programs in 1983:

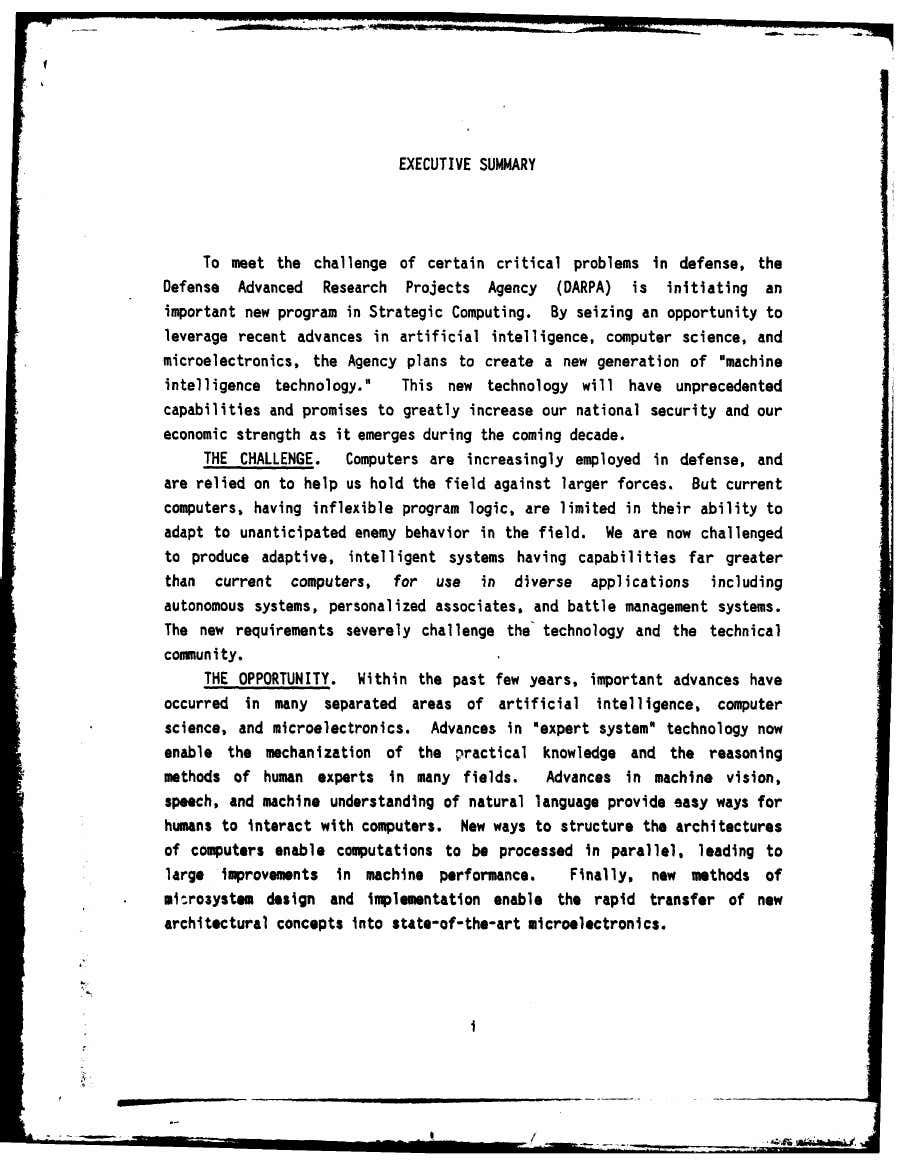

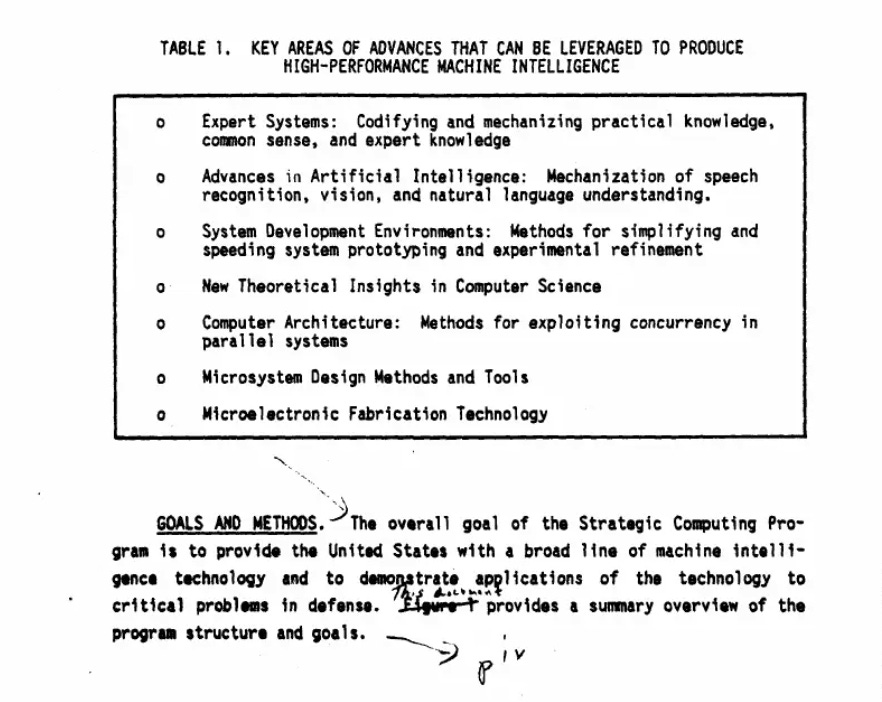

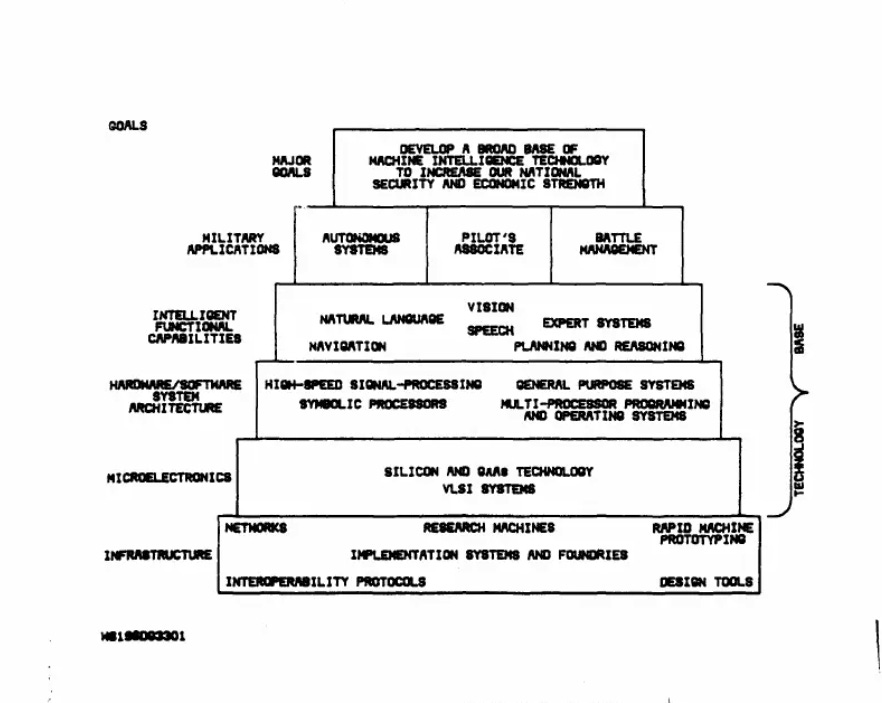

DARPA launched its Strategic Computing Initiative, on which it would spend close to $1 billion by 1993.

The UK’s Alvey Programme provided around £350 million in funding across various areas of research, including AI.

DARPA’s goal was still very much focused on immediate military applications, but they had regained hope in AI after the success of expert systems like XCON. Snippets from the original Strategic Computing document reflect this renewed faith:

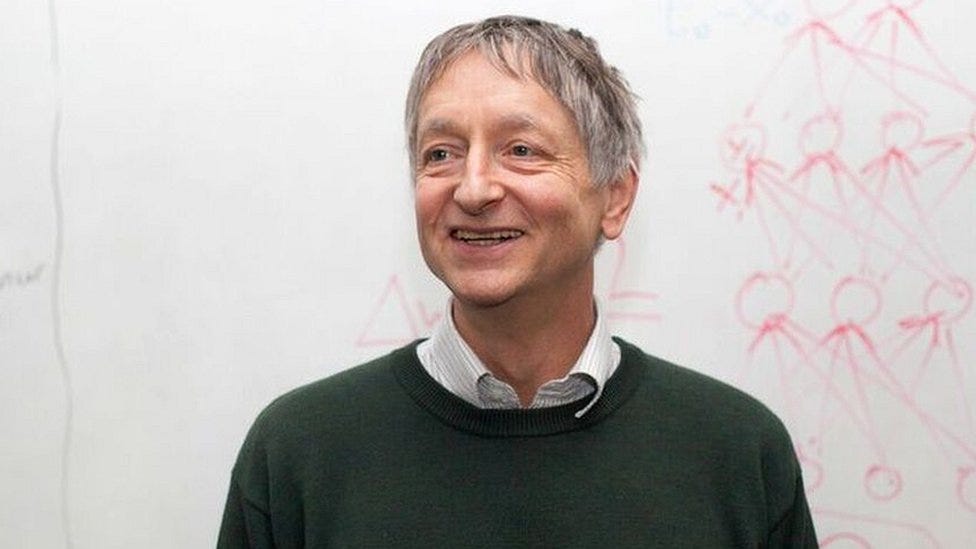

While the Symbolic AI philosophy became the prevailing one with the success of expert systems, some researchers still believed in the Connectionist approach. Enter: Geoffrey Hinton.

Hinton, along with fellow researchers David Rumelhart and Ronald J. Williams, popularized the backpropagation algorithm in a 1986 paper, and it became an essential component of improving a machine’s ability to learn with neural networks.
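Here’s a minimal sketch of what backpropagation does, assuming a tiny network with two inputs, two hidden units and one output, all using sigmoid activations and a squared-error loss (my own illustrative choices, far smaller than anything used in practice). The algorithm starts from the error at the output and uses the chain rule to figure out how much each weight contributed to it; a gradient-descent step then nudges every weight to reduce the error. The finite-difference comparison is just a sanity check that the computed gradient is right.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """2 inputs -> 2 sigmoid hidden units -> 1 sigmoid output."""
    W1, b1, W2, b2 = params
    h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(2)]
    y = sigmoid(W2[0] * h[0] + W2[1] * h[1] + b2)
    return h, y

def loss(params, x, target):
    """Squared error between the network's output and the target."""
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2

def backprop(params, x, target):
    """Gradient of the loss for every weight, computed from the output layer backwards."""
    W1, b1, W2, b2 = params
    h, y = forward(params, x)
    # Error signal at the output: dL/dy times the sigmoid derivative y * (1 - y).
    delta_out = (y - target) * y * (1 - y)
    dW2 = [delta_out * h[j] for j in range(2)]
    db2 = delta_out
    # Chain rule: pass the output error back through W2 to each hidden unit.
    delta_h = [delta_out * W2[j] * h[j] * (1 - h[j]) for j in range(2)]
    dW1 = [[delta_h[j] * x[i] for i in range(2)] for j in range(2)]
    db1 = list(delta_h)
    return dW1, db1, dW2, db2

def gradient_step(params, grads, lr=0.1):
    """Move every weight a small step against its gradient (gradient descent)."""
    W1, b1, W2, b2 = params
    dW1, db1, dW2, db2 = grads
    new_W1 = [[W1[j][i] - lr * dW1[j][i] for i in range(2)] for j in range(2)]
    new_b1 = [b1[j] - lr * db1[j] for j in range(2)]
    new_W2 = [W2[j] - lr * dW2[j] for j in range(2)]
    return new_W1, new_b1, new_W2, b2 - lr * db2

# Arbitrary starting weights and a single training example (illustrative values only).
params = ([[0.5, -0.3], [0.8, 0.2]], [0.1, -0.1], [1.2, -0.7], 0.05)
x, target = (1.0, 0.5), 1.0
grads = backprop(params, x, target)

# Sanity check: compare one backprop gradient with a finite-difference estimate.
eps = 1e-6
plus = ([[0.5 + eps, -0.3], [0.8, 0.2]], [0.1, -0.1], [1.2, -0.7], 0.05)
minus = ([[0.5 - eps, -0.3], [0.8, 0.2]], [0.1, -0.1], [1.2, -0.7], 0.05)
numeric = (loss(plus, x, target) - loss(minus, x, target)) / (2 * eps)
print("backprop:", grads[0][0][0], "numeric:", numeric)

before = loss(params, x, target)
after = loss(gradient_step(params, grads), x, target)
print("loss before:", before, "after one step:", after)
```

Repeating that forward pass, backward pass and small update over many examples is, in a nutshell, how neural networks learn.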

Hinton opposed DARPA’s focus on funding immediate military applications of AI, so he moved from the US to the University of Toronto in 1987:

“I came to Canada because I like the society here and because they have very good funding for basic research. It’s not very much money, but they give it for basic curiosity driven research as opposed to big applications.”

– Geoffrey Hinton in an interview with Global News

When I attended Geoffrey Hinton’s talk at the Collision conference in Toronto a few months ago, he was still just as opposed to military applications of AI as he had been in the 1980s.

The Second AI Winter

It didn’t take long for governments to lose faith in the AI industry… again.

By the late 80s and early 90s, none of the three government programs had made any significant strides towards their AI objectives and aspirations.

The most surprising contributor to AI’s second winter was, ironically, the rise of personal computers built by Apple and IBM.

Up until 1987, the AI field relied on LISP machines, computers specialized for running AI programs written in LISP, the preferred programming language of AI researchers at the time. The problem was that LISP machines cost a fortune: a fully-equipped one would cost you $50k-$150k.

At the same time, Apple and IBM were building personal computers that were cheaper, more powerful, and more useful. They could also run LISP programs, making specialized LISP hardware obsolete. Major LISP machine companies like Symbolics and Lisp Machines Inc. went bankrupt, and the LISP machine market collapsed.

Even XCON, the expert system that had reignited interest in the AI field, lost its status as the golden child of AI applications. As a “symbolic AI” system, XCON relied on thousands of hand-programmed rules, which made it too difficult and expensive to update and maintain.

AI became an embarrassment. Researchers even avoided using the words “artificial intelligence” because of their association with past failures, empty promises, and unmet expectations. Can you believe there was a time when saying “AI” was almost taboo?

It’s ironic, considering that companies these days can’t seem to finish a sentence without mentioning AI.

Since we have the luxury of living in the present, we know that AI would eventually rise again. But this time, it would keep rising, reaching new highs in hype, public and private funding, consumer interest, and practical applications, along with plenty of criticism and opposition.

To be continued in Part 3, next week.

Further Reading

If you enjoyed today’s story, here’s some bonus material to read about some of the topics I mentioned:

📖 Stories of the week

🤖 Meta makes some big announcements at their Meta Connect event

Meta was busy this week. It announced a new mixed reality headset (much cheaper than an Apple Vision Pro), Llama 3.2 (a new version of its AI model), updates to its AI-powered Ray-Ban glasses, and a preview of Orion, its first AR glasses.

🤖 Big changes at OpenAI

OpenAI’s leadership is changing quickly. CTO Mira Murati announced last week that she’s leaving the company. This follows other high-profile exits over the past year, while President Greg Brockman is on an extended leave. OpenAI is also in the process of raising $7 billion at a $150 billion valuation. Rumours are also flying about OpenAI’s possible transition from a non-profit to a for-profit corporation known as a “benefit corporation”. If you’re wondering what that means exactly, here are parts of the definition:

Benefit corporation status allows corporations to opt-out of shareholder primacy and opt-into stakeholder governance. With stakeholder governance, a company is required to take into consideration anyone that is materially affected by that company’s decision-making, like workers, customers, local communities, wider society and the environment.

A benefit corporation is a traditional corporation with modified obligations, committing it to higher standards of purpose, accountability and transparency.

🤖 Bonus

🎁 A giveaway from NVIDIA

Master in-demand skills in AI, data science, accelerated computing, and more through NVIDIA's specialized training and certification, enhancing your professional knowledge and industry recognition.

Instructions:

Take a free or paid self-paced course from the AI learning essentials or the Gen AI and LLM learning path. You can use the code “FAWZI” for a 10% discount on any paid course.

Fill out this form to be entered in a giveaway to receive a self-paced course for free!

The deadline is October 18, 2024 and the winner will be announced and contacted shortly after that.

🔮 Share Year 2049

Share this post in your group chats with friends, family, and coworkers.

If this was forwarded to you, subscribe to Year 2049 for free.